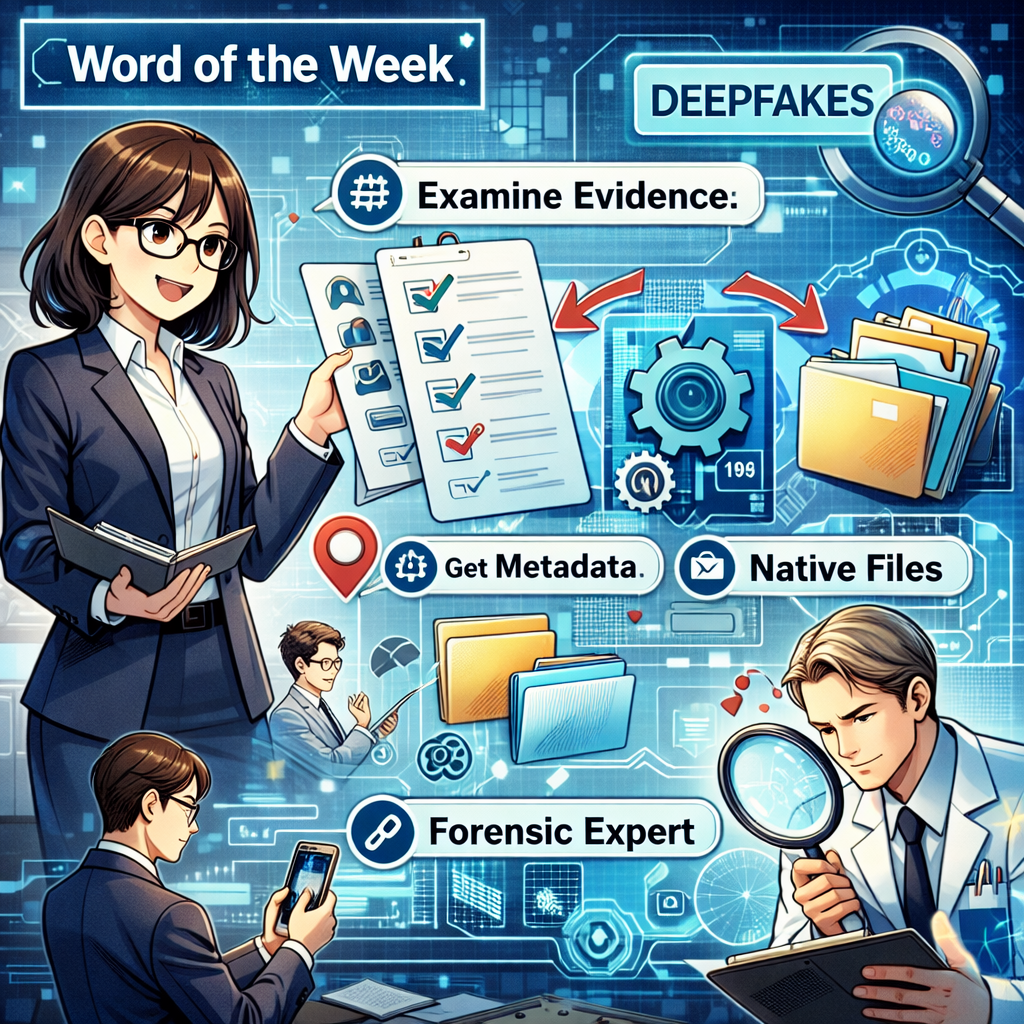

Word of the Week: Deepfakes: How Lawyers Can Spot Fake Digital Evidence and Avoid ABA Model Rule Violations ⚖️

A Tech-Savvy Lawyer Needs to Spot Deepfakes Before They Become Courtroom Ethics Violations!

“Deepfakes” are AI‑generated or heavily manipulated audio, video, or images that convincingly depict people saying or doing things that never happened.🧠 They are moving from internet novelty to everyday litigation risk, especially as parties try to slip fabricated “evidence” into the record.📹

Recent cases and commentary show courts will not treat deepfakes as harmless tech problems. Judges have dismissed actions outright and imposed severe sanctions when parties submit AI‑generated or altered media, because such evidence attacks the integrity of the judicial process itself.⚖️ At the same time, courts are wary of lawyers who cry “deepfake” without real support, since baseless challenges can look like gamesmanship rather than genuine concern about authenticity.

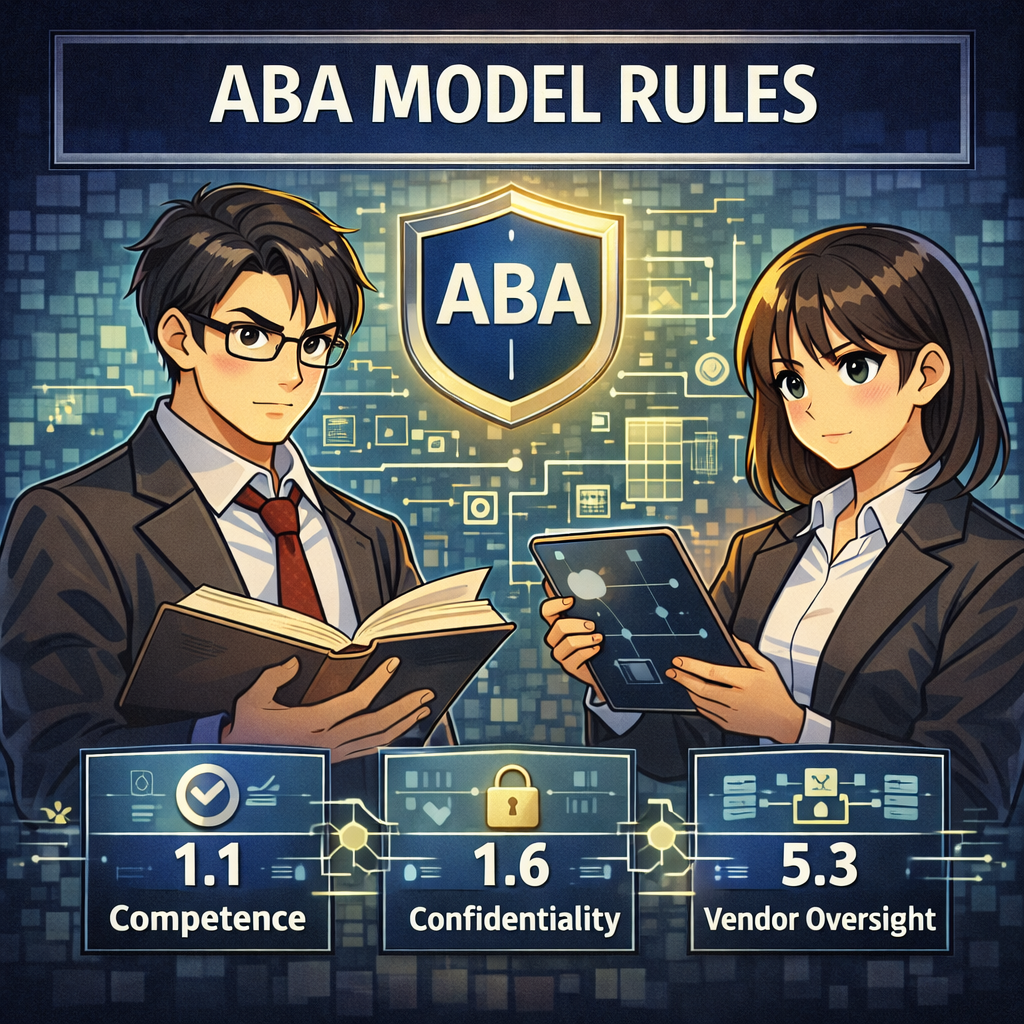

For practicing lawyers, deepfakes are first and foremost a professional responsibility issue. ABA Model Rule 1.1 (Competence), through Comment 8, includes a duty to keep abreast of the benefits and risks of relevant technology, and that covers generative AI tools that create or detect deepfakes. You do not need to be an engineer, but you should recognize common red flags, know when to request native files or metadata, and understand when to bring in a qualified forensic expert.

Deepfakes in Litigation: Detect Fake Evidence, Protect Your License!

Deepfakes also implicate Model Rule 3.3 (Candor Toward the Tribunal) and Model Rule 3.4 (Fairness to Opposing Party and Counsel). If you knowingly offer manipulated media, or ignore obvious signs of fabrication in your client’s “evidence,” you risk presenting false material to the court and obstructing access to truthful proof. Courts have made clear that submitting fake digital evidence can justify terminating sanctions, fee shifting, and referrals for disciplinary action.

Model Rule 8.4(c), which prohibits conduct involving dishonesty, fraud, deceit, or misrepresentation, sits in the background of every deepfake decision. A lawyer who helps create, weaponize, or strategically “look away” from deepfake evidence is not just making a discovery mistake; they may be engaging in professional misconduct. Likewise, a lawyer who recklessly accuses an opponent of using deepfakes without factual grounding risks violating duties of candor and professionalism.

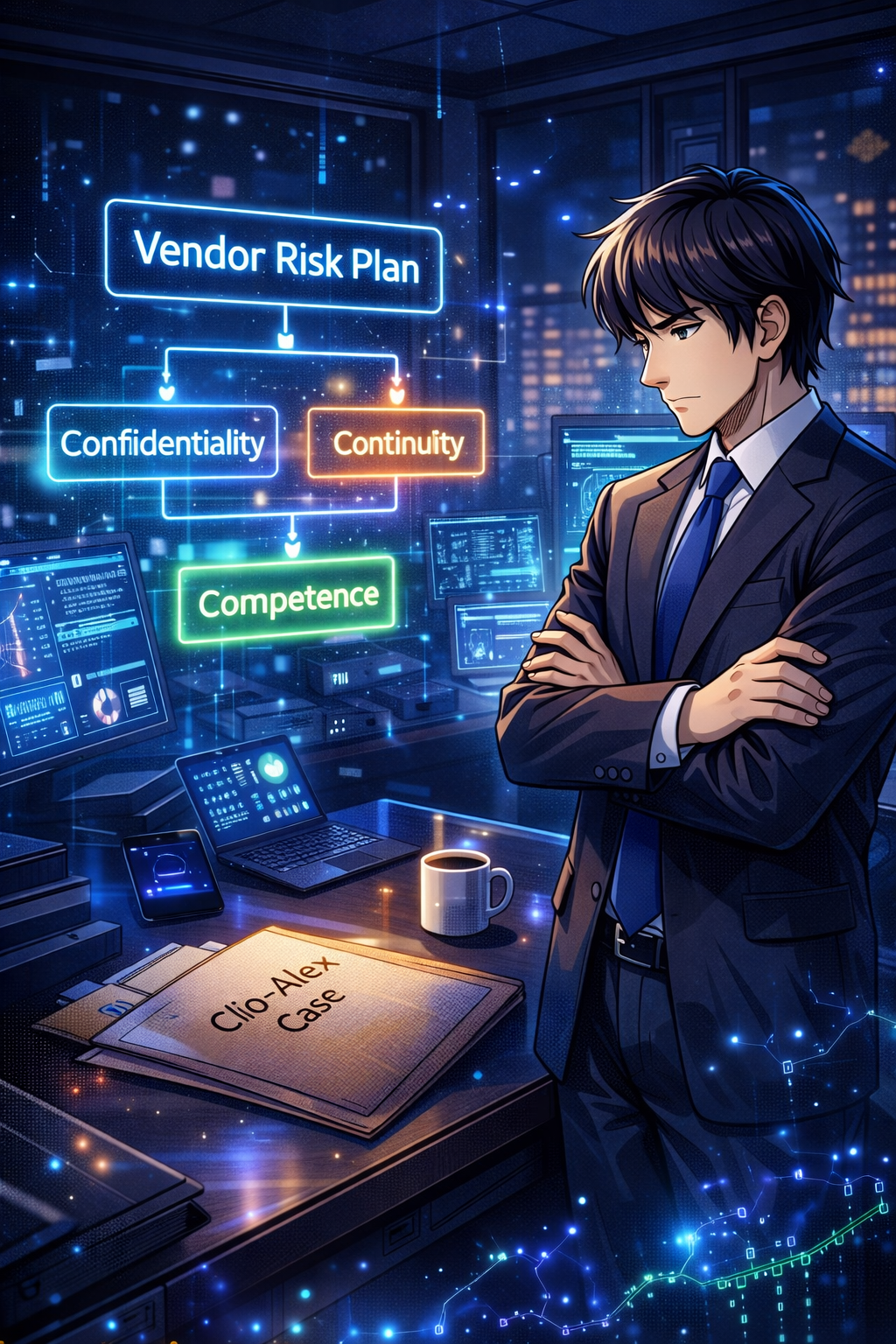

Practically, you can start protecting your clients with a few repeatable steps. Ask early in the case what digital media exists, how it was created, and who controlled the devices or accounts.🔍 Build authentication into your discovery plan, including requests for original files, device logs, and platform records that can help confirm provenance. When the stakes justify it, consult a forensic expert rather than relying on “gut feel” about whether a recording “looks real.”
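For readers who want to see what "confirming provenance" can look like at the most basic level, here is a minimal Python sketch that records a SHA-256 fingerprint and basic file details at the moment you receive a media file. The function name `fingerprint_file` and the fields it returns are illustrative assumptions, not a forensic standard. A matching hash only shows that a file has not changed since the hash was recorded; it says nothing about whether the content is genuine, so it supports a forensic expert's work rather than replacing it.

```python
import hashlib
import os
from datetime import datetime, timezone


def fingerprint_file(path, chunk_size=65536):
    """Return a SHA-256 digest plus basic filesystem details for one file.

    Hypothetical helper for illustration only; not a forensic tool.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large video files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    stats = os.stat(path)
    return {
        "file": os.path.basename(path),
        "sha256": digest.hexdigest(),
        "size_bytes": stats.st_size,
        # Filesystem timestamp only: it changes on copy and is easy to alter.
        "modified_utc": datetime.fromtimestamp(
            stats.st_mtime, tz=timezone.utc
        ).isoformat(),
    }
```

Recording this fingerprint when a file first arrives, and again before it is produced or offered, gives you a simple way to show that the copy in the record is the same one you received.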

Lawyers Need to Know Deepfakes, Metadata, and ABA Ethics Rules!

Finally, talk to clients about deepfakes before they become a problem. Explain that altering media or using AI to “clean up” evidence is dangerous, even if they believe they are only fixing quality.📲 Remind them that courts are increasingly sophisticated about AI and that discovery misconduct in this area can destroy otherwise strong cases. Treat deepfakes as another routine topic in your litigation checklist, alongside spoliation and privilege, and you will be better prepared for the next “too good to be true” video that lands in your inbox.