MTC🪙🪙: When Reputable Databases Fail: What Lawyers Must Do After AI Hallucinations Reach the Court

What should a lawyer do when they inadvertently use a hallucinated cite?

In a sobering December 2025 filing in Integrity Investment Fund, LLC v. Raoul, plaintiff's counsel disclosed what many in the legal profession feared: even reputable legal research platforms can generate hallucinated citations. The Motion to Amend Complaint revealed that "one of the cited cases in the pending Amended Complaint could not be found," along with other miscited cases, despite the legal team using LexisNexis and LEXIS+ Document Analysis tools rather than general-purpose AI like ChatGPT. The attorney expressed being "horrified" by these inexcusable errors, but horror alone does not satisfy ethical obligations.

This case crystallizes a critical truth for the legal profession: artificial intelligence remains a tool requiring rigorous human oversight, not a substitute for attorney judgment. When technology fails—and Stanford research confirms it fails at alarming rates—lawyers must understand their ethical duties and remedial obligations.

The Scope of the Problem: Even Premium Tools Hallucinate

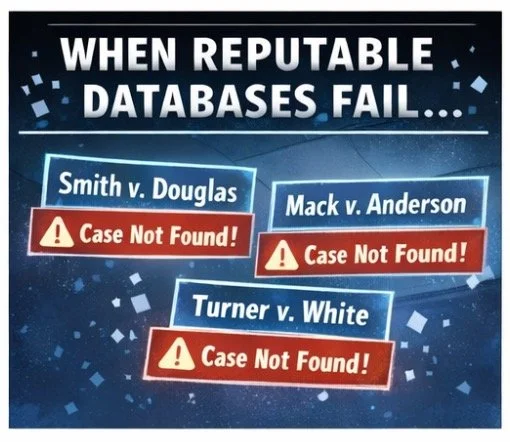

Legal AI vendors marketed their products as hallucination-resistant, leveraging retrieval-augmented generation (RAG) technology to ground responses in authoritative legal databases. Yet as reported in our 📖 WORD OF THE WEEK YEAR🥳: Verification: The 2025 Word of the Year for Legal Technology ⚖️💻, independent testing by Stanford's Human-Centered Artificial Intelligence program and RegLab reveals persistent accuracy problems. Lexis+ AI produced incorrect information 17% of the time, while Westlaw's AI-Assisted Research hallucinated at double that rate, on 34% of queries.

These statistics expose a dangerous misconception: that specialized legal research platforms eliminate fabrication risks. The Integrity Investment Fund case demonstrates that attorneys using established, subscription-based legal databases still face citation failures. Courts nationwide have documented hundreds of cases involving AI-generated hallucinations, with 324 incidents in U.S. federal, state, and tribal courts as of late 2025. Legal professionals can no longer claim ignorance about AI limitations.

The consequences extend beyond individual attorneys. As one federal court warned, hallucinated citations that infiltrate judicial opinions create precedential contamination, potentially "sway[ing] an actual dispute between actual parties"—an outcome the court described as "scary". Each incident erodes public confidence in the justice system and, as one commentator noted, "sets back the adoption of AI in law".

The Ethical Framework: Three Foundational Rules

When attorneys discover AI-generated errors in court filings, three Model Rules of Professional Conduct establish clear obligations.

ABA Model Rule 1.1 mandates technological competence. The 2012 amendment to Comment 8 requires lawyers to "keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology". Forty-one jurisdictions have adopted this technology competence requirement. This duty is ongoing and non-delegable. Attorneys cannot outsource their responsibility to understand the tools they deploy, even when those tools carry premium price tags and prestigious brand names.

Technological competence means understanding that current AI legal research tools hallucinate at rates ranging from 17% to 34%. It means recognizing that longer AI-generated responses contain more falsifiable propositions and therefore pose a greater risk of hallucination. It means implementing verification protocols rather than accepting AI output as authoritative.
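The link between response length and risk can be illustrated with a rough back-of-the-envelope model. The sketch below assumes, purely for illustration, that each proposition in an AI response is wrong independently and with the same probability. The 5% per-proposition rate and the function name `chance_of_any_error` are hypothetical; the Stanford figures above are per-query rates and are not used here.

```python
def chance_of_any_error(per_proposition_error_rate: float, propositions: int) -> float:
    """Probability that at least one of `propositions` independent claims is wrong."""
    if not 0.0 <= per_proposition_error_rate <= 1.0:
        raise ValueError("error rate must be between 0 and 1")
    if propositions < 0:
        raise ValueError("number of propositions cannot be negative")
    # P(at least one error) = 1 - P(every proposition is correct)
    return 1.0 - (1.0 - per_proposition_error_rate) ** propositions


# Hypothetical 5% error rate per proposition (an assumption, not a measured figure).
for n in (1, 5, 10, 20):
    risk = chance_of_any_error(0.05, n)
    print(f"{n:>2} propositions: {risk:.0%} chance of at least one error")
```

Under these assumptions, the chance that a response contains at least one error climbs from 5% for a single proposition to roughly 64% for twenty. Real errors are unlikely to be independent, so the numbers are only directional, but they show why a longer answer deserves more verification, not less.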

ABA Model Rule 3.3 requires candor toward the tribunal. This rule prohibits knowingly making false statements of law or fact to a court and imposes an affirmative duty to correct false statements previously made. The duty continues until the conclusion of the proceeding. Critically, courts have held that the standard under Federal Rule of Civil Procedure 11 is objective reasonableness, not subjective good faith. As one court stated, "An attorney who acts with 'an empty head and a pure heart' is nonetheless responsible for the consequences of his actions".

When counsel in Integrity Investment Fund discovered the miscitations, filing a Motion to Amend Complaint fulfilled this corrective duty. The attorney took responsibility and sought to rectify the record before the court relied on fabricated authority. This represents the ethical minimum. Waiting for opposing counsel or the court to discover errors invites sanctions and disciplinary referrals.

The duty of candor applies regardless of how the error originated. In Kaur v. Desso, a Northern District of New York court rejected an attorney's argument that time pressure justified inadequate verification, stating that "the need to check whether the assertions and quotations generated were accurate trumps all". Professional obligations do not yield to convenience or deadline stress.

ABA Model Rules 5.1 and 5.3 establish supervisory responsibilities. Managing attorneys must ensure that subordinate lawyers and non-lawyer staff comply with the Rules of Professional Conduct. When a supervising attorney has knowledge of specific misconduct and ratifies it, the supervisor bears responsibility. This principle extends to AI-assisted work product.

The Integrity Investment Fund matter reportedly involved an experienced attorney assisting with drafting. Regardless of delegation, the signing attorney retains ultimate accountability. Law firms must implement training programs on AI limitations, establish mandatory review protocols for AI-generated research, and create policies governing which tools may be used and under what circumstances. Partners reviewing junior associate work must apply heightened scrutiny to AI-assisted documents, treating them as first drafts requiring comprehensive validation.

Federal Rule of Civil Procedure 11: The Litigation Hammer

Reputable databases can hallucinate too!

Beyond professional responsibility rules, Federal Rule of Civil Procedure 11 authorizes courts to impose sanctions on attorneys who submit documents without a reasonable inquiry into the facts and law. Courts may sanction the attorney, the party, or both. Sanctions range from monetary penalties paid to the court or opposing party to non-monetary directives, including mandatory continuing legal education, public reprimands, and referrals to disciplinary authorities.

Rule 11 contains a 21-day safe harbor provision. Before filing a sanctions motion, the moving party must serve the motion on opposing counsel, who has 21 days to withdraw or correct the challenged filing. If counsel withdraws or corrects the filing during this window, the motion may not be filed. The safe harbor applies only to motions brought by a party; it does not limit the court's power to order counsel to show cause on its own initiative under Rule 11(c)(3). This procedural protection rewards attorneys who implement monitoring systems to catch mistakes early.

Courts have imposed escalating consequences as AI hallucination cases proliferate. Early cases resulted in warnings or modest fines. Recent sanctions have grown more severe. A Colorado attorney received a 90-day suspension after admitting in text messages that he failed to verify ChatGPT-generated citations. An Arizona federal judge sanctioned an attorney and required her to personally notify three federal judges whose names appeared on fabricated opinions, revoked her pro hac vice admission, and referred her to the Washington State Bar Association. A California appellate court issued a historic fine after discovering 21 of 23 quotes in an opening brief were fake.

Morgan & Morgan—the 42nd largest law firm by headcount—faced a $5,000 sanction when attorneys filed a motion citing eight nonexistent cases generated by an internal AI platform. The court divided the sanction among three attorneys, with the signing attorney bearing the largest portion. The firm's response acknowledged "great embarrassment" and promised reforms, but the reputational damage extends beyond the individual case.

What Attorneys Must Do: A Seven-Step Protocol

Legal professionals who discover AI-generated errors in filed documents must act decisively. The following protocol aligns with ethical requirements and minimizes sanctions risk:

First, immediately cease relying on the affected research. Do not file additional briefs or make oral arguments based on potentially fabricated citations. If a hearing is imminent, notify the court that you are withdrawing specific legal arguments pending verification.

Second, conduct a comprehensive audit. Review every citation in the affected filing. Retrieve and read the full text of each case or statute cited. Verify that quoted language appears in the source and that the legal propositions match the authority's actual holding. Check each authority's subsequent treatment using Shepard's or KeyCite to confirm cases remain good law. This process cannot be delegated to the AI tool that generated the original errors.

Third, assess the materiality of errors. Determine whether fabricated citations formed the basis for legal arguments or appeared as secondary support. In Integrity Investment Fund, counsel noted that "the main precedents...and the...statutory citations are correct, and none of the Plaintiffs' claims were based on the mis-cited cases". This distinction affects the appropriate remedy but does not eliminate the obligation to correct the record.

Fourth, notify opposing counsel immediately. Candor extends to adversaries. Explain that you have discovered citation errors and are taking corrective action. This transparency may forestall sanctions motions and demonstrates good faith to the court.

Fifth, file a corrective pleading or motion. In Integrity Investment Fund, counsel filed a Motion to Amend Complaint under Federal Rule of Civil Procedure 15(a)(2). Alternative vehicles include motions to correct the record, errata sheets, or supplemental briefs. The filing should acknowledge the errors explicitly, explain how they occurred without shifting blame to technology, take personal responsibility, and specify the corrections being made.

Sixth, notify the court in writing. Even if opposing counsel does not move for sanctions, attorneys have an independent duty to inform the tribunal of material misstatements. The notification should be factual and direct. In cases where fabricated citations attributed opinions to real judges, courts have required attorneys to send personal letters to those judges clarifying that the citations were fictitious.

Seventh, implement systemic reforms. Review firm-wide AI usage policies. Provide training on verification requirements. Establish mandatory review checkpoints for AI-assisted work product. Consider technology solutions such as citation validation software that flags cases not found in authoritative databases. Document these reforms in any correspondence with the court or bar authorities to demonstrate that the incident prompted institutional change.

The Duty to Supervise: Training the Humans and the Machines

The Integrity Investment Fund case involved an experienced attorney assisting with drafting, yet errors reached the court. This pattern appears throughout AI hallucination cases. In the Chicago Housing Authority litigation, the responsible attorney had previously published an article on ethical considerations of AI in legal practice, yet still submitted a brief citing the nonexistent case Mack v. Anderson. Knowledge about AI risks does not automatically translate into effective verification practices.

Law firms must treat AI tools as they would junior associates—competent at discrete tasks but requiring supervision. Partners should review AI-generated research as they would first-year associate work, assuming errors exist and exercising vigilant attention to detail. Unlike human associates who learn from corrections, AI systems may perpetuate errors across multiple matters until their underlying models are retrained.

Training programs should address specific hallucination patterns. AI tools frequently fabricate case citations with realistic-sounding names, accurate-appearing citation formats, and plausible procedural histories. They misrepresent legal holdings, confuse arguments made by litigants with court rulings, and fail to respect the hierarchy of legal authority. They cite proposed legislation as enacted law and rely on overturned precedents as current authority. Attorneys must learn to identify these red flags.

Supervisory duties extend to non-lawyer staff. If a paralegal uses an AI grammar checker on a document containing confidential case strategy, the supervising attorney bears responsibility for any confidentiality breach. When legal assistants use AI research tools, attorneys must verify their work with the same rigor applied to traditional research methods.

Client Communication and Informed Consent

Watch out for AI hallucinations!

Ethical obligations to clients intersect with AI usage in multiple ways. ABA Model Rule 1.4 requires attorneys to keep clients reasonably informed and to explain matters to the extent necessary for clients to make informed decisions. Several state bar opinions suggest that attorneys should obtain informed consent before inputting confidential client information into AI tools, particularly those that use data for model training.

The confidentiality analysis turns on the AI tool's data-handling practices. Many general-purpose AI platforms explicitly state in their terms of service that they use input data for model training and improvement. This creates significant privilege and confidentiality risks. Even legal-specific platforms may share data with third-party vendors or retain information on servers outside the firm's control. Attorneys must review vendor agreements, understand data flow, and ensure adequate safeguards exist before using AI tools on client matters.

When AI-generated errors reach a court filing, clients deserve prompt notification. The errors may affect litigation strategy, settlement calculations, or case outcome predictions. In extreme cases, such as when a court dismisses claims or imposes sanctions, malpractice liability may arise. Transparent communication preserves the attorney-client relationship and demonstrates that the lawyer prioritizes the client's interests over protecting the lawyer's own reputation.

Jurisdictional Variations: Illinois Sets the Standard

While the ABA Model Rules provide a national framework, individual jurisdictions have begun addressing AI-specific issues. Illinois, where the Integrity Investment Fund case was filed, has taken proactive steps.

The Illinois Supreme Court adopted a Policy on Artificial Intelligence effective January 1, 2025. The policy recognizes that AI presents challenges for protecting private information, avoiding bias and misrepresentation, and maintaining judicial integrity. The court emphasized "upholding the highest ethical standards in the administration of justice" as a primary concern.

In September 2025, Judge Sarah D. Smith of Madison County Circuit Court issued a Standing Order on Use of Artificial Intelligence in Civil Cases, later extended to other Madison County courtrooms. The order "embraces the advancement of AI" while mandating that tools "remain consistent with professional responsibilities, ethical standards and procedural rules". Key provisions include requirements for human oversight and legal judgment, verification of all AI-generated citations and legal statements, disclosure of expert reliance on AI to formulate opinions, and potential sanctions for submissions including "case law hallucinations, [inappropriate] statements of law, or ghost citations".

Arizona has been particularly active given the high number of AI hallucination cases in the state—second only to the Southern District of Florida. The State Bar of Arizona issued guidance calling on lawyers to verify all AI-generated research before submitting it to courts or clients. The Arizona Supreme Court's Steering Committee on AI and the Courts issued similar guidance emphasizing that judges and attorneys, not AI tools, are responsible for their work product.

Other states are following suit. The State Bar of California issued Formal Opinion 2015-193 interpreting technological competence requirements. The District of Columbia Bar issued Ethics Opinion 388 in April 2024, specifically addressing generative artificial intelligence in client matters. These opinions converge on several principles: competence includes understanding AI technology sufficiently to be confident it advances client interests, all AI output requires verification before use, and technology assistance does not diminish attorney accountability.

The Path Forward: Responsible AI Integration

The legal profession stands at a crossroads. AI tools offer genuine efficiency gains—automated document review, pattern recognition in discovery, preliminary legal research, and jurisdictional surveys. Rejecting AI entirely would place practitioners at a competitive disadvantage and potentially violate the duty to provide competent, efficient representation.

Yet uncritical adoption invites the disasters documented in hundreds of cases nationwide. The middle path provided by the Illinois courts requires human oversight and legal judgment at every stage.

Attorneys should adopt a "trust but verify" approach. Use AI for initial research, document drafting, and analytical tasks, but implement mandatory verification protocols before any work product leaves the firm. Treat AI-generated citations as provisional until independently confirmed. Read cases rather than relying on AI summaries. Check the currency of legal authorities. Confirm that quotations appear in the cited sources.

Law firms should establish tiered AI usage policies. Low-risk applications such as document organization or calendar management may require minimal oversight. High-risk applications, including legal research, brief writing, and client advice, demand multiple layers of human review. Some uses—such as inputting highly confidential information into general-purpose AI platforms—should be prohibited entirely.
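A tiered policy of this kind is simple enough to write down as a lookup table. The sketch below is one hypothetical encoding: the tier names, task labels, and the `required_oversight` function are illustrative, and the choice to route unlisted uses to the multi-layer review tier is an assumption of this sketch, not a rule drawn from any bar opinion.

```python
from enum import Enum


class Tier(Enum):
    MINIMAL_OVERSIGHT = "minimal oversight"
    MULTI_LAYER_REVIEW = "multiple layers of human review"
    PROHIBITED = "prohibited"


# Hypothetical task labels mapped to the tiers described above.
AI_USAGE_POLICY = {
    "document_organization": Tier.MINIMAL_OVERSIGHT,
    "calendar_management": Tier.MINIMAL_OVERSIGHT,
    "legal_research": Tier.MULTI_LAYER_REVIEW,
    "brief_writing": Tier.MULTI_LAYER_REVIEW,
    "client_advice": Tier.MULTI_LAYER_REVIEW,
    "confidential_input_to_general_purpose_ai": Tier.PROHIBITED,
}


def required_oversight(task: str) -> Tier:
    """Return the oversight tier for a task.

    Assumption: a use the policy does not list is treated as high risk,
    so new tools cannot bypass review simply by being new.
    """
    return AI_USAGE_POLICY.get(task, Tier.MULTI_LAYER_REVIEW)


for task in ("calendar_management", "legal_research", "unlisted_new_tool"):
    print(f"{task}: {required_oversight(task).value}")
```

Writing the policy as data rather than as a memo makes it easy to audit and update, and the conservative default reflects the article's broader point that unfamiliar tools deserve scrutiny first and trust later.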

Billing practices must evolve. If AI reduces the time required for legal research from eight hours to two hours, the efficiency gain should benefit clients through lower fees rather than inflating attorney profits. Clients should not pay both for AI tool subscriptions and for the same number of billable hours as traditional research methods would require. Transparent billing practices build client trust and align with fiduciary obligations.

Lessons from Integrity Investment Fund

The Integrity Investment Fund case offers several instructive elements. First, the attorney used a reputable legal database rather than a general-purpose AI. This demonstrates that brand name and subscription fees do not guarantee accuracy. Second, the attorney discovered the errors and voluntarily sought to amend the complaint rather than waiting for opposing counsel or the court to raise the issue. This proactive approach likely mitigated potential sanctions. Third, the attorney took personal responsibility, describing himself as "horrified" rather than deflecting blame to the technology.

The court's response also merits attention. Rather than immediately imposing sanctions, the court directed defendants to respond to the motion to amend and address the effect on pending motions to dismiss. This measured approach recognizes that not all AI-related errors warrant the most severe consequences, particularly when counsel acts promptly to correct the record. Defendants agreed that "the striking of all miscited and non-existent cases [is] proper", suggesting that cooperation and candor can lead to reasonable resolutions.

The fact that "the main precedents...and the...statutory citations are correct" and "none of the Plaintiffs' claims were based on the mis-cited cases" likely influenced the court's analysis. This underscores the importance of distinguishing between errors in supporting citations and errors in primary authorities. Both require correction, but the latter carries greater risk of case-dispositive consequences and sanctions.

The Broader Imperative: Preserving Professional Judgment

Lawyers must verify their AI work!

Judge Castel's observation in Mata v. Avianca that "many harms flow from the submission of fake opinions" captures the stakes. Beyond individual case outcomes, AI hallucinations threaten systemic values: judicial efficiency, precedential reliability, adversarial fairness, and public confidence in legal institutions.

Attorneys serve as officers of the court with special obligations to the administration of justice. This role cannot be automated. AI lacks the judgment to balance competing legal principles, to assess the credibility of factual assertions, to understand client objectives in their full context, or to exercise discretion in ways that advance both client interests and systemic values.

The attorney in Integrity Investment Fund learned a costly lesson that the profession must collectively absorb: reputable databases, sophisticated algorithms, and expensive subscriptions do not eliminate the need for human verification. AI remains a tool—powerful, useful, and increasingly indispensable—but still just a tool. The attorney who signs a pleading, who argues before a court, and who advises a client bears professional responsibility that technology cannot assume.

As AI capabilities expand and integration deepens, the temptation to trust automated output will intensify. The profession must resist that temptation. Every citation requires verification. Every legal proposition demands confirmation. Every AI-generated document needs human review. These are not burdensome obstacles to efficiency but essential guardrails protecting clients, courts, and the justice system itself.

When errors occur—and the statistics confirm they will occur with disturbing frequency—attorneys must act immediately to correct the record, accept responsibility, and implement reforms preventing recurrence. Horror at one's mistakes, while understandable, satisfies no ethical obligation. Action does.

MTC