MTC: “Legal AI institutional memory” engages core ethics duties under the ABA Model Rules, so it is not optional “nice to know” tech.⚖️🤖

Institutional Memory Meets the ABA Model Rules

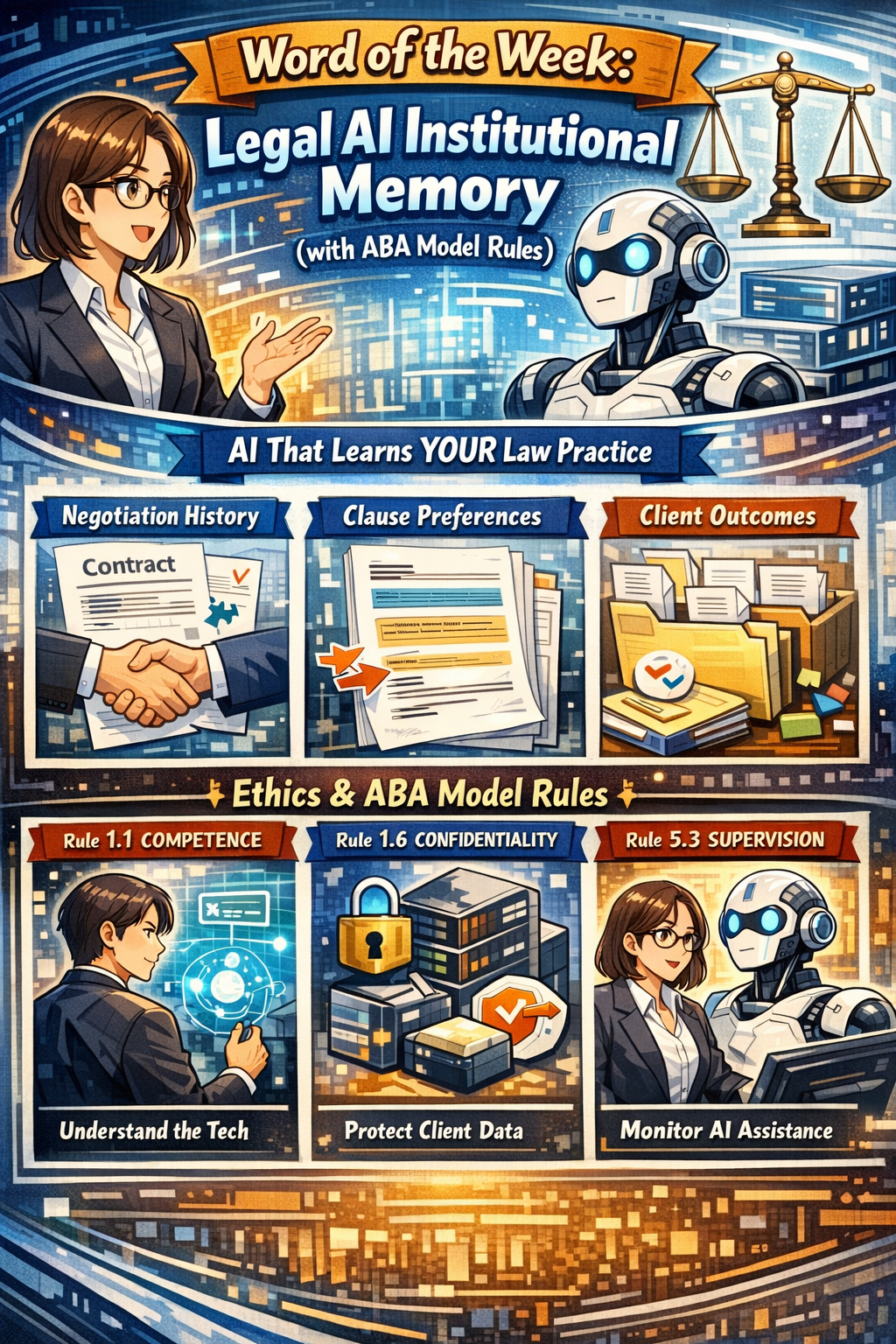

“Legal AI institutional memory” is AI that remembers how your firm actually practices law, not just what generic precedent says. It captures negotiation history, clause choices, outcomes, and client preferences across matters so each new assignment starts from experience instead of a blank page.

From an ethics perspective, this capability sits directly in the path of ABA Model Rule 1.1 on competence, Rule 1.6 on confidentiality, and Rule 5.3 on responsibilities regarding nonlawyer assistance (which is now widely read to cover AI systems). Comment 8 to Rule 1.1 stresses that competent representation requires understanding the “benefits and risks associated with relevant technology,” which squarely includes institutional‑memory AI in 2026. Adopting or rejecting this technology blindly can itself create risk, particularly if your peers are using it to deliver more thorough, consistent, and efficient work.🧩

Rule 1.6 requires “reasonable efforts” to prevent the inadvertent or unauthorized disclosure of, or unauthorized access to, information relating to the representation. Because institutional memory centralizes past matters and sensitive patterns, it raises the stakes on vendor security, configuration, and firm governance. Rule 5.3 extends supervision duties to “nonlawyer assistance,” which ethics commentators and bar materials now interpret to include AI tools used in client work. In short, if your AI is doing work that would otherwise be done by a human assistant, you must supervise it as such.🛡️

Why Institutional Memory Matters (Competence and Client Service)

Tools like Luminance and Harvey now market institutional‑memory features that retain negotiation patterns, drafting preferences, and matter‑level context across time. They promise faster contract cycles, fewer errors, and better use of a firm’s accumulated know‑how. Used wisely, that aligns with Rule 1.1’s requirement that you bring “thoroughness and preparation” reasonably necessary for the representation, and Comment 8’s directive to keep abreast of relevant technology.

At the same time, ethical competence does not mean turning judgment over to the model. It means understanding how the system makes recommendations, what data it relies on, and how to validate outputs against your playbooks and client instructions. Ethics guidance on generative AI emphasizes that lawyers must review AI‑generated work product, verify sources, and ensure that technology does not substitute for legal judgment. Legal AI institutional memory can enhance competence only if you treat it as an assistant you supervise, not an oracle you obey.⚙️

Legal AI That Remembers Your Practice—Ethics Required, Not Optional

How Legal AI Institutional Memory Works (and Where the Rules Bite)

Institutional‑memory platforms typically:

Ingest a corpus of contracts or matters.

Track negotiation moves, accepted fall‑backs, and outcomes over time.

Expose that knowledge through natural‑language queries and drafting suggestions.
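For readers who want a concrete picture, the three steps above can be sketched in a few lines of Python. This is a toy illustration only, not how any particular vendor's product works: the `NegotiationRecord` fields, the `InstitutionalMemory` class, and the sample matter IDs are all hypothetical, and a real platform would replace the simple frequency count with far richer retrieval.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class NegotiationRecord:
    """One remembered clause outcome from a past matter (hypothetical schema)."""
    matter_id: str
    clause_type: str   # e.g. "liability_cap"
    position: str      # the wording or fallback that was proposed
    accepted: bool     # whether the counterparty accepted it


class InstitutionalMemory:
    """Toy in-memory store; an assumption for illustration, not a vendor API."""

    def __init__(self) -> None:
        self._records: List[NegotiationRecord] = []

    def ingest(self, record: NegotiationRecord) -> None:
        # Step 1: ingest a corpus of contracts or matters.
        self._records.append(record)

    def accepted_positions(self, clause_type: str) -> Counter:
        # Step 2: track which positions were accepted over time.
        return Counter(
            r.position
            for r in self._records
            if r.clause_type == clause_type and r.accepted
        )

    def suggest(self, clause_type: str) -> Optional[str]:
        # Step 3: expose that knowledge as a drafting suggestion.
        counts = self.accepted_positions(clause_type)
        if not counts:
            return None  # nothing remembered, so nothing to suggest
        return counts.most_common(1)[0][0]


memory = InstitutionalMemory()
memory.ingest(NegotiationRecord("M-001", "liability_cap", "12 months of fees", True))
memory.ingest(NegotiationRecord("M-002", "liability_cap", "12 months of fees", True))
memory.ingest(NegotiationRecord("M-003", "liability_cap", "24 months of fees", True))
memory.ingest(NegotiationRecord("M-004", "liability_cap", "unlimited", False))

print(memory.suggest("liability_cap"))  # prints "12 months of fees"
```

Even in this toy version, every record is tied to a matter and carries client-specific negotiating positions, which is why the confidentiality and supervision questions arise as soon as the corpus is ingested.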

That design engages several ethics touchpoints:

Rule 1.1 (Competence): You must understand at a basic level how the AI uses and stores client information, what its limitations are, and when it is appropriate to rely on its suggestions. This may require CLE, vendor training, or collaboration with more technical colleagues until you reach a reasonable level of comfort.

Rule 1.6 (Confidentiality): You must ensure that the vendor contract, configuration, and access controls provide “reasonable efforts” to protect confidentiality, including encryption, role‑based access, and breach‑notification obligations. Ethics guidance on cloud and AI use stresses the need to investigate provider security, retention practices, and rights to use or mine your data.

Rule 5.3 (Nonlawyer Assistance): Because AI tools are treated as a form of “nonlawyer assistance,” you must supervise their work as you would a contract review outsourcer, document vendor, or litigation support team. That includes selecting competent providers, giving appropriate instructions, and monitoring outputs for compliance with your ethical obligations.🤖

Governance Checklist: Turning Ethics into Action

For lawyers with limited to moderate tech skills, it helps to translate the ABA Model Rules into a short adoption checklist.✅

When evaluating or deploying legal AI institutional memory, consider:

Define Scope (Rules 1.1 and 1.6): Start with a narrow use case such as NDAs or standard vendor contracts, and specify which documents the system may use to build its memory.

Vet the Vendor (Rules 1.6 and 5.3): Ask about data segregation, encryption, access logs, regional hosting, subcontractors, and incident‑response processes; confirm clear contractual obligations to preserve confidentiality and notify you of incidents.

Configure Access (Rules 1.6 and 5.3): Use role‑based permissions, client or matter scoping, and retention settings that match your existing information‑governance and legal‑hold policies.

Supervise Outputs (Rules 1.1 and 5.3): Require that lawyers review AI suggestions, verify sources, and override recommendations where they conflict with client instructions or risk tolerance.

Educate Your Team (Rule 1.1): Provide short trainings on how the system works, what it remembers, and how the Model Rules apply; document this as part of your technology‑competence efforts.
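For firms working with an IT team or vendor on configuration, the access, retention, and supervision items in the checklist can be expressed as simple rules. The sketch below is a minimal illustration under stated assumptions: the role names, the seven-year retention period, and every function and class name are hypothetical placeholders, not requirements of the Model Rules and not any product's actual settings.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional, Set

# Hypothetical policy values; set these from your own governance policies.
ROLES_ALLOWED_TO_QUERY = {"partner", "associate"}
LAWYER_ROLES = {"partner", "associate"}
RETENTION = timedelta(days=365 * 7)


@dataclass
class User:
    name: str
    role: str                                   # e.g. "partner", "paralegal"
    matters: Set[str] = field(default_factory=set)


@dataclass
class MemoryEntry:
    matter_id: str
    created: date
    legal_hold: bool = False


def can_access(user: User, entry: MemoryEntry) -> bool:
    """Configure Access: role-based permission plus matter scoping."""
    return user.role in ROLES_ALLOWED_TO_QUERY and entry.matter_id in user.matters


def is_due_for_deletion(entry: MemoryEntry, today: date) -> bool:
    """Retention setting that never overrides a legal hold."""
    if entry.legal_hold:
        return False
    return today - entry.created > RETENTION


def release_suggestion(text: str, reviewed_by: Optional[User]) -> str:
    """Supervise Outputs: no AI suggestion is used without lawyer review."""
    if reviewed_by is None or reviewed_by.role not in LAWYER_ROLES:
        raise PermissionError("AI suggestion needs lawyer review before use")
    return text


alice = User("Alice", "associate", {"M-001"})
bob = User("Bob", "paralegal", {"M-001"})
old_entry = MemoryEntry("M-001", date(2015, 1, 1))

print(can_access(alice, old_entry))                      # True
print(can_access(bob, old_entry))                        # False
print(is_due_for_deletion(old_entry, date(2026, 1, 1)))  # True
```

The point of writing the rules down this way is that each one maps to a checklist item and can be tested and documented, which supports the "reasonable efforts" record under Rule 1.6.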

Educating Your Team Is Core to AI Competence

This approach respects the rising bar for technological competence while protecting client information and maintaining human oversight.⚖️
