MTC: The Hidden Danger in Your Firm: Why We Must Teach the Difference Between “Open” and “Closed” AI!

Does your staff understand the difference between “free” and “paid” AI? Your license could depend on it!

I sit on an advisory board for a school that trains paralegals. We meet to discuss curriculum. We talk about the future of legal support. In a recent meeting, a presentation by a private legal research company caught my attention. It stopped me cold. The topic was Artificial Intelligence. The focus was on use and efficiency. But something critical was missing.

The lesson did not distinguish between public-facing and private tools. It treated AI as a monolith. This is a dangerous oversimplification. It is a liability waiting to happen.

We are in a new era of legal technology. It is exciting. It is also perilous. The peril comes from confusion. Specifically, the confusion between paid, closed-system legal research tools and public-facing generative AI.

Your paralegals, law clerks, and staff use these tools. They use them to draft emails. They use them to summarize depositions. Do they know where that data goes? Do you?

The Two Worlds of AI

There are two distinct worlds of AI in our profession.

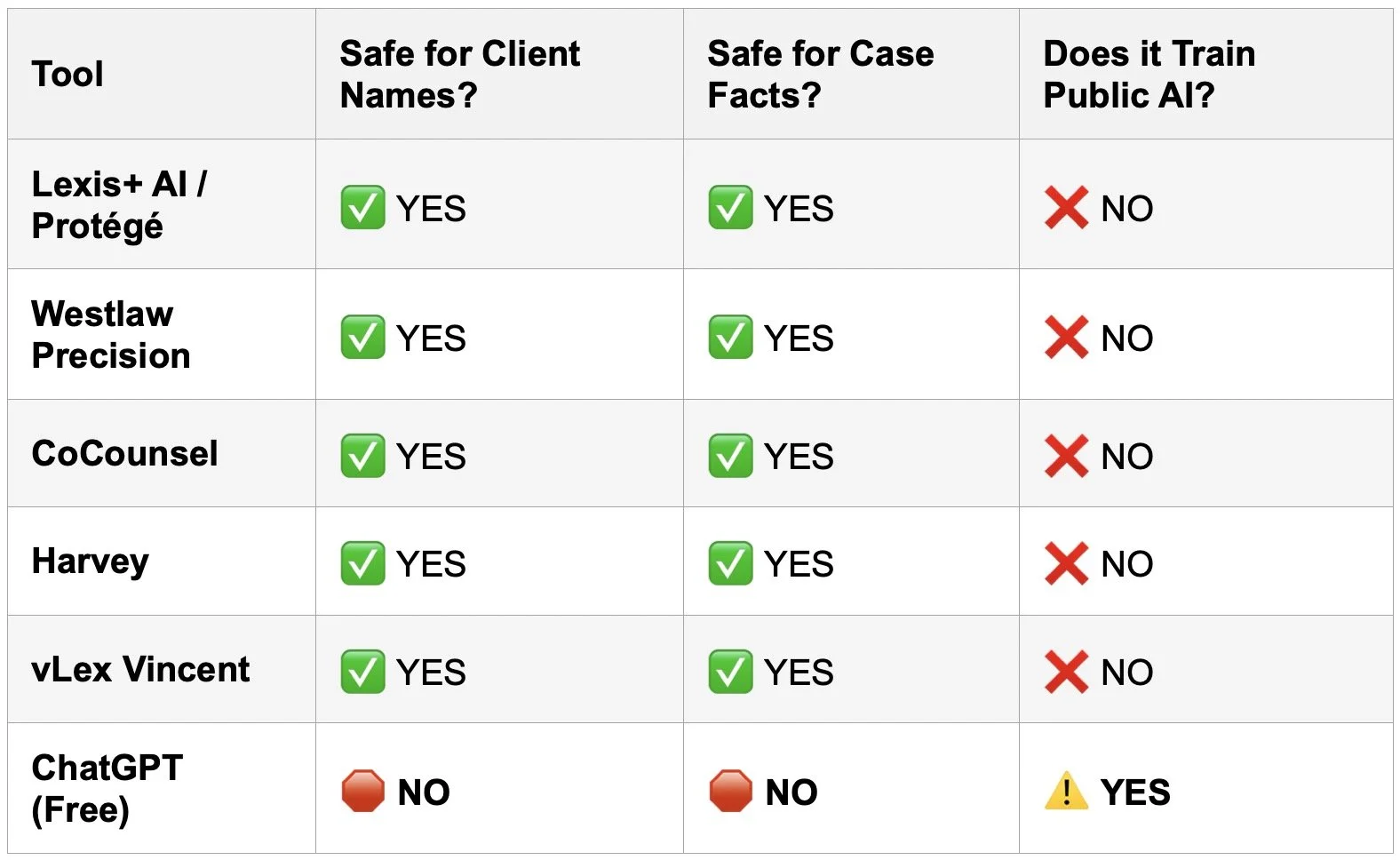

First, there is the world of "Closed" AI. These are the tools we pay for, such as Lexis+/Protege, Westlaw Precision, CoCounsel, Harvey, and vLex Vincent. These platforms are built for lawyers. They are walled gardens. You pay a premium for them. (Always check the terms and conditions of your providers.) That premium buys you more than just access. It buys you privacy. It buys you security. When you upload a case file to Westlaw, it stays there. The AI analyzes it. It does not learn from it for the public. It does not share your client’s secrets with the world. The data remains yours. The confidentiality is baked in.

Then, there is the world of "Open" or "Public" AI. This is ChatGPT. This is Perplexity. This is Claude. These tools are miraculous. But they are also voracious learners.

When you type a query into the free version of ChatGPT, you are not just asking a question. Unless the provider's terms and your settings say otherwise, you may be training the model. You are feeding the beast. If a paralegal types, "Draft a motion to dismiss for John Doe, who is accused of embezzlement at [Specific Company]," that information leaves your firm. It sits on a third party's servers, where it may be retained and used for training. It is no longer confidential.

This is the distinction that was missing from the lesson plan. It is the distinction that could cost you your license.

The Duty to Supervise

Do you and your staff know when you can and can’t use free AI in your legal work?

You might be thinking, "I don't use ChatGPT for client work, so I'm safe." You are wrong.

You are not the only one doing the work. Your staff is doing the work. Your paralegals are doing the work.

Under the ABA Model Rules of Professional Conduct, you are responsible for them. Look at Rule 5.3. It covers "Responsibilities Regarding Nonlawyer Assistance." It is unambiguous. You must make reasonable efforts to ensure your staff's conduct is compatible with your professional obligations.

If your paralegal breaches confidentiality using AI, it is your breach. If your associate hallucinates a case citation using a public LLM, it is your hallucination.

This connects directly to Rule 1.1, Comment 8. This represents the duty of technology competence. You cannot supervise what you do not understand. You must understand the risks associated with relevant technology. Today, that means understanding how Large Language Models (LLMs) handle data.

The "Hidden AI" Problem

I have discussed this on The Tech-Savvy Lawyer.Page Podcast. We call it the "Hidden AI" crisis. AI is creeping into tools we use every day. It is in Adobe. It is in Zoom. It is in Microsoft 365.

Public-facing AI is useful. I use it. I love it for marketing. I use it for brainstorming generic topics. I use it to clean up non-confidential text. But I never trust it with a client's name. I never trust it with a very specific fact pattern.

A paid legal research tool is different. It is a scalpel. It is precise. It is sterile. A public chatbot is a Swiss Army knife found on the sidewalk. It might work. But you don't know where it's been.

The Training Gap

The advisory board meeting revealed a gap. Schools are teaching students how to use AI. They are teaching prompts. They are teaching speed. They are not emphasizing the "where."

The "where" matters. Where does the data go?

We must close this gap in our own firms. You cannot assume your staff knows the difference. To a digital native, a text box is a text box. They see a prompt window in Westlaw. They see a prompt window in ChatGPT. They look the same. They act the same.

They are not the same.

One protects you. The other exposes you.

A Practical Solution

I have written about this in my blog posts regarding AI ethics. The solution is not to ban AI. That is impossible. It is also foolish. AI is a competitive advantage.

* Always check the terms of use in your agreements with private platforms to determine whether your clients' confidential data and PII are protected.

The solution is policies and training.

Audit Your Tools. Know what you have. Do you have an enterprise license for ChatGPT? If so, your data might be private. If not, assume it is public.

Train on the "Why." Don't just say "No." Explain the mechanism. Explain that public AI learns from inputs. Use the analogy of a confidential conversation in a crowded elevator versus a private conference room.

Define "Open" vs. "Closed." Create a visual guide. List your "Green Light" tools (Westlaw, Lexis, etc.). List your "Red Light" tools for client data (Free ChatGPT, personal Gmail, etc.).

Supervise Output. Review the work. AI hallucinates. Even paid tools can make mistakes. Public tools make up cases entirely. We have all seen the headlines. Don't be the next headline.
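For firms that want to put the "Green Light" and "Red Light" list in front of staff as more than a poster, here is a minimal sketch in Python. It is an illustration, not a compliance tool. The names `TOOL_POLICY`, `classify_tool`, and `may_use_with_client_data` are hypothetical, and the entries simply mirror the examples above. A real list has to come from your own audit and each vendor's current terms.

```python
from enum import Enum


class Light(Enum):
    GREEN = "approved for client data"
    RED = "never enter client data"


# Hypothetical firm policy table (an assumption for illustration).
# The entries mirror the examples in the article; a real list must come
# from the firm's own audit and each vendor's current terms of use.
TOOL_POLICY = {
    "westlaw": Light.GREEN,
    "lexis": Light.GREEN,
    "free chatgpt": Light.RED,
    "personal gmail": Light.RED,
}


def classify_tool(name: str) -> Light:
    """Return the policy for a tool, treating anything unlisted as RED."""
    return TOOL_POLICY.get(name.strip().lower(), Light.RED)


def may_use_with_client_data(name: str) -> bool:
    """True only for tools the firm has explicitly approved."""
    return classify_tool(name) is Light.GREEN
```

The design choice that matters is the default. A tool nobody has reviewed is treated as "Red Light," which matches the rule of thumb above: if you are not sure it is private, assume it is public.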

The Expert Advantage

The line between “free” and “paid” AI could be a matter of keeping your bar license!

On The Tech-Savvy Lawyer.Page, I often say that technology should make us better lawyers, not lazier ones.

Using Lexis+/Protege, Westlaw Precision, CoCounsel, Harvey, vLex Vincent, etc. is about leveraging a curated, verified database. It is about relying on authority. Using a public LLM for legal research is about rolling the dice.

Your license is hard-earned. Your reputation is priceless. Do not risk them on a free chatbot.

The lesson from the advisory board was clear. The schools are trying to keep up. But the technology moves faster than the curriculum. It is up to us. We are the supervisors. We are the gatekeepers.

Take time this week. Gather your team. Ask them what tools they use. You might be surprised. Then, teach them the difference. Show them the risks.

Be the tech-savvy lawyer your clients deserve. Be the supervisor the Rules require.

The tools are here to stay. Let’s use them effectively. Let’s use them ethically. Let’s use them safely.

MTC