Redaction Fails & Ethical Tales: What the Epstein Files Teach Us About Data Security

Join us for this Google NotebookLM-powered discussion of last week’s “How To: Redact PDF Documents Properly and Recover Data from Failed Redactions: A Guide for Lawyers After the DOJ Epstein Files Release ‘Leak’.” This presentation is ideal for those who prefer to watch, listen, and learn. In this episode, we dissect the Department of Justice’s recent high-profile failure to properly redact the Epstein files, a blunder that exposed sensitive information through a simple "copy and paste." We explore the critical difference between hiding data and destroying it, the technical steps that make your redactions permanent, and the complex ethical obligations facing both the sender (the duties of competence and confidentiality) and the receiver (ABA Model Rule 4.4) of inadvertently disclosed information.
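
To make the hiding-versus-destroying distinction concrete, here is a minimal, standard-library Python sketch. It assumes a hypothetical, uncompressed PDF page content stream. Real PDFs usually compress their streams and can encode text in more complex ways, so treat this as an illustration of the principle rather than a working redaction tool. The names `MASKED_STREAM`, `extract_text`, and `redact`, and the witness name, are all invented for the example. The stream draws a line of text and then paints a filled black rectangle over it. A "copy and paste" reader still sees the text operand. A real redaction removes that operand from the file.

```python
import re

# Hypothetical, uncompressed content stream for one PDF page (assumption for
# illustration; real files usually compress streams). The text is drawn first,
# then a filled black rectangle is painted over part of it: the "sticky note".
MASKED_STREAM = (
    b"BT /F1 12 Tf 72 700 Td (Witness: Jane Roe) Tj ET\n"
    b"0 0 0 rg 130 695 80 16 re f\n"
)

# A literal string operand followed by the Tj (show text) operator.
# Simplification: ignores escaped parentheses, hex strings, and TJ arrays.
TJ_PATTERN = re.compile(rb"\(([^()]*)\)\s*Tj")


def extract_text(stream: bytes) -> list[str]:
    """Approximate what "Select All" + copy recovers: every text operand,
    no matter what is painted on top of it."""
    return [m.group(1).decode("latin-1") for m in TJ_PATTERN.finditer(stream)]


def redact(stream: bytes, secret: str) -> bytes:
    """Destroy the secret by rewriting each text operand without it.
    The black rectangle is left in place as the visible marker."""
    def scrub(match: re.Match) -> bytes:
        text = match.group(1).decode("latin-1").replace(secret, "")
        return b"(" + text.encode("latin-1") + b") Tj"

    return TJ_PATTERN.sub(scrub, stream)


if __name__ == "__main__":
    # Masked only: the box hides the name on screen, but the text is still there.
    print(extract_text(MASKED_STREAM))  # ['Witness: Jane Roe']

    # Redacted: the box remains, and the name is gone from the file itself.
    clean = redact(MASKED_STREAM, "Jane Roe")
    print(extract_text(clean))  # ['Witness: ']
```

Both versions look identical on screen. Only the redacted stream has actually lost the name. In practice, dedicated redaction tools do this work across compressed streams, fonts, images, and metadata. Hand-editing page content is not a substitute.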

In our conversation, we cover the following:

[00:00:00] – Introduction: The tech lessons from the DOJ’s redaction failure.

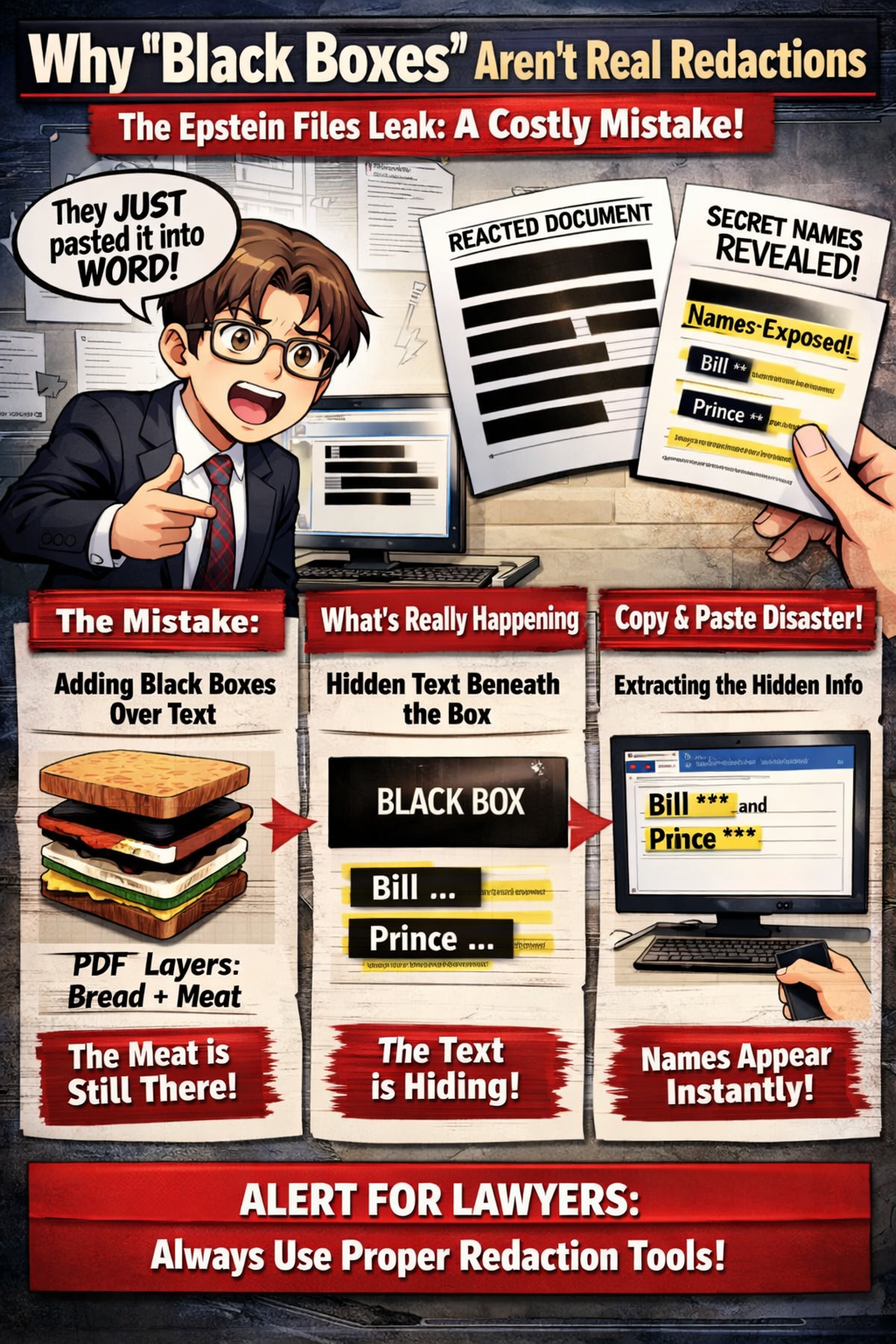

[00:00:30] – The Golden Rule of Data Redaction: Why hiding text isn't the same as destroying it.

[00:01:00] – Case Study: The Epstein Files release and the "sophisticated" hack that wasn't (it was just a bad redaction).

[00:01:30] – The Sticky Note Analogy: Visualizing the difference between masking data and digitally shredding it.

[00:02:00] – The 5-Step Safety Check: How to use dedicated tools to sanitize documents and remove metadata properly.

[00:02:40] – The "Select All" Trick: Demonstrating how easily black boxes can be bypassed without hacking skills.

[00:03:30] – The Sender’s Liability: Analyzing the Duty of Competence and Duty of Confidentiality in the digital age.

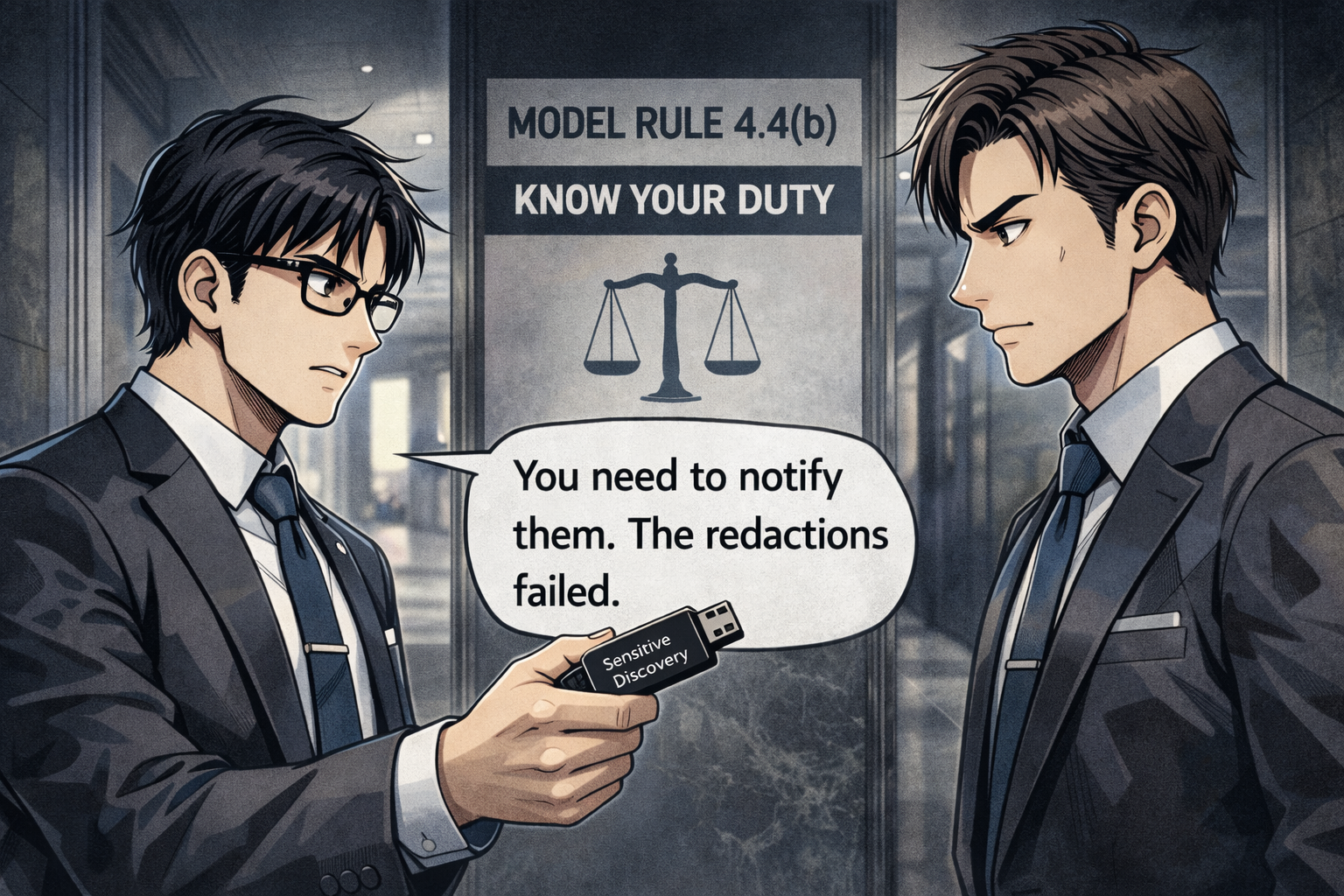

[00:04:15] – The Receiver’s Ethical Dilemma: Navigating ABA Model Rule 4.4 when you receive information you weren't supposed to see.

[00:05:00] – Conclusion & Call to Action: Steps to stay ahead in legal tech.
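
The "Select All" demonstration also suggests a simple check to run before anything is released. The sketch below assumes you have already copied or extracted the document’s text with whatever tool you use. It then flags any term that was supposed to be redacted but can still be found in that text. The function names and sample text are hypothetical. The check does not inspect metadata, images, or hidden layers, so it complements a dedicated sanitizing tool rather than replacing one.

```python
import re


def normalize(text: str) -> str:
    """Lower-case and collapse runs of whitespace, because text copied out of
    a PDF often arrives with broken lines or doubled spaces."""
    return re.sub(r"\s+", " ", text).strip().lower()


def leaked_terms(extracted_text: str, redacted_terms: list[str]) -> list[str]:
    """Return each term that was supposed to be removed but can still be
    found in the text a reader could copy out of the released file."""
    haystack = normalize(extracted_text)
    return [term for term in redacted_terms if normalize(term) in haystack]


if __name__ == "__main__":
    # Hypothetical text pasted after "Select All" on a file whose black boxes
    # were drawn on top of the text instead of replacing it.
    pasted = "Exhibit 12\nWitness: Jane\n  Roe\nAccount ending 4417"
    found = leaked_terms(pasted, ["Jane Roe", "4417", "John Doe"])
    if found:
        print("Do not release; still extractable:", found)
```

Substring matching on normalized text is deliberately crude. It can miss terms split by hyphenation or rendered as images, so a clean result is necessary but not sufficient.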

RESOURCES:

Mentioned in the episode:

American Bar Association Model Rule 4.4 (Respect for Rights of Third Persons): https://www.americanbar.org/groups/professional_responsibility/publications/model_rules_of_professional_conduct/rule_4_4_respect_for_rights_of_third_persons/

Software & Cloud Services mentioned in the conversation:

Google NotebookLM: https://notebooklm.google.com/

PDF Redaction Tools (General Reference): https://www.adobe.com/acrobat/online/pdf-editor.html