Word of the Week: What is a “Token” in AI parlance?

Lawyers need to know what “tokens” are in AI jargon!

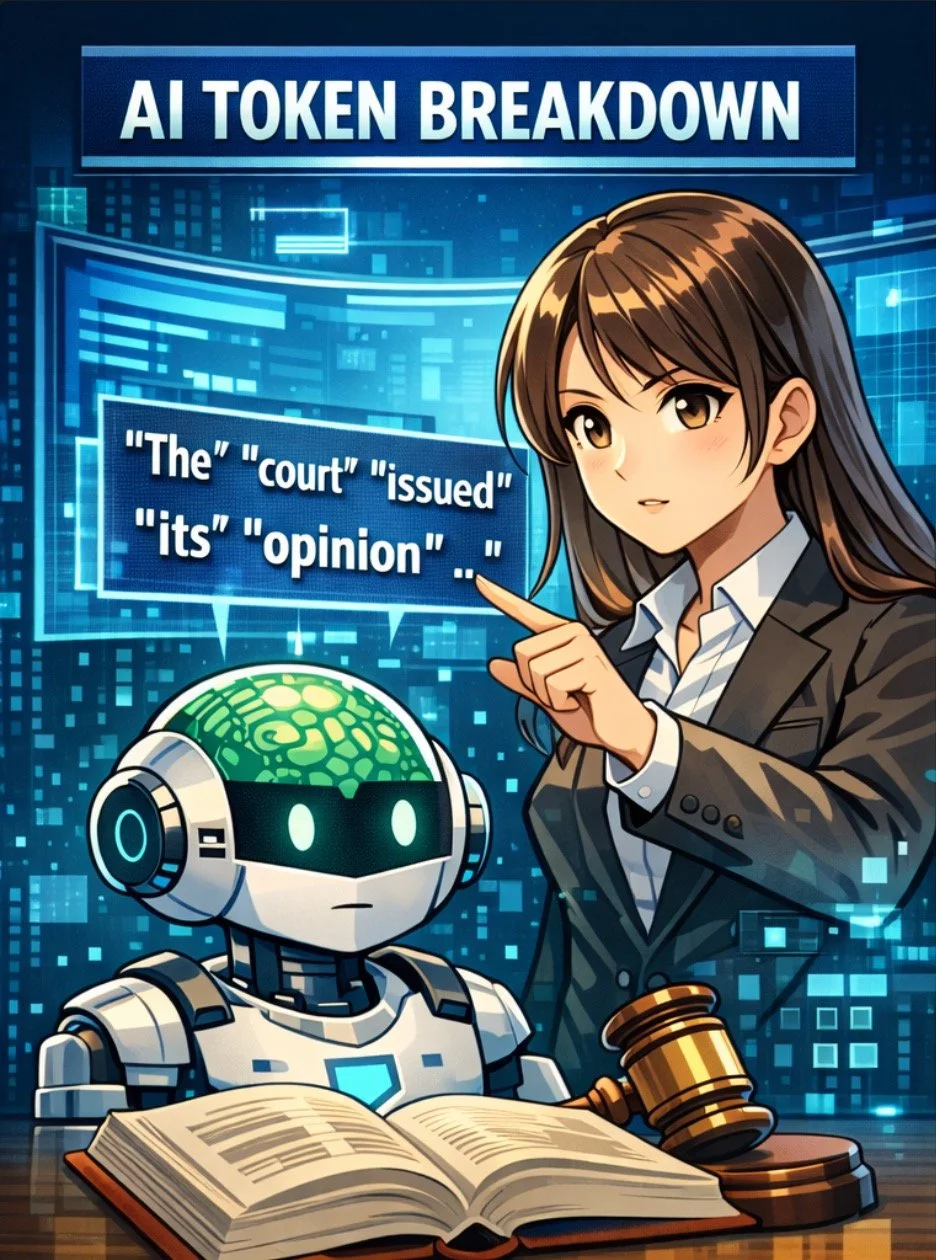

In artificial intelligence, a “token” is a small segment of text (a word, a piece of a word, or even a punctuation mark) that AI tools like ChatGPT and other large language models (LLMs) use to understand and generate language. In simple terms, tokens are the “building blocks” of communication for AI. When you type a sentence, the system breaks it into tokens so it can analyze the text and predict, one token at a time, a relevant response.

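For example, the sentence “The court issued its opinion.” might be split into six tokens: “The,” “court,” “issued,” “its,” “opinion,” and “.” The exact split depends on the tool, since many tokenizers break longer or less common words into several subword pieces. By interpreting how those tokens relate to one another, the AI produces natural, coherent language that feels human-like.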
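For readers who want to see the idea in action, here is a minimal Python sketch of that split. It is a teaching aid only: the function name `simple_tokenize` is made up for this post, and the word-and-punctuation rule it uses is an assumption that is far simpler than the subword tokenizers real AI tools rely on.

```python
import re

def simple_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens.

    Illustrative only: real LLM tokenizers use learned subword
    vocabularies and will often split text differently.
    """
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

tokens = simple_tokenize("The court issued its opinion.")
print(tokens)       # ['The', 'court', 'issued', 'its', 'opinion', '.']
print(len(tokens))  # 6
```

The count of six matches the example sentence, but a commercial tool may report a different number for the same text.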

This concept matters to law firms and practitioners because AI systems often measure capacity and billing by token count, not by word count. AI-powered tools used for document review, legal research, and e-discovery commonly calculate both usage and cost based on the number of tokens processed. Longer documents consume more tokens and therefore cost more to analyze. Token capacity also limits how much text a platform can handle in a single request, so a large set of discovery material may need to be processed in smaller batches.
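To make the billing and capacity point concrete, the rough sketch below estimates cost and batch count from a token count. Every number in it is hypothetical: `PRICE_PER_1K_TOKENS` and `TOKEN_LIMIT` are placeholder values chosen for illustration, not any vendor's actual pricing or limits, and the token counter is the same simplified word-and-punctuation rule rather than a real tokenizer.

```python
import math
import re

# Hypothetical figures for illustration; check your platform's published terms.
PRICE_PER_1K_TOKENS = 0.01  # assumed price in dollars per 1,000 tokens
TOKEN_LIMIT = 8000          # assumed maximum tokens per request

def count_tokens(text: str) -> int:
    """Approximate token count using a simple word/punctuation split."""
    return len(re.findall(r"\w+|[^\w\s]", text))

def estimate_cost(token_count: int, price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Estimated cost in dollars for processing the given number of tokens."""
    return token_count / 1000 * price_per_1k

def batches_needed(token_count: int, limit: int = TOKEN_LIMIT) -> int:
    """Number of requests needed if each request can hold at most `limit` tokens."""
    return math.ceil(token_count / limit)

# A stand-in "document": the example sentence repeated 5,000 times.
document = "The court issued its opinion. " * 5000
n = count_tokens(document)

print(f"Tokens: {n}")
print(f"Estimated cost: ${estimate_cost(n):.2f}")
print(f"Batches needed: {batches_needed(n)}")
```

Under these assumed figures, the 30,000-token stand-in document would not fit in a single request and would have to be split across four batches.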

Lawyers have an ethical duty to know how tokens apply when using AI in their legal work!

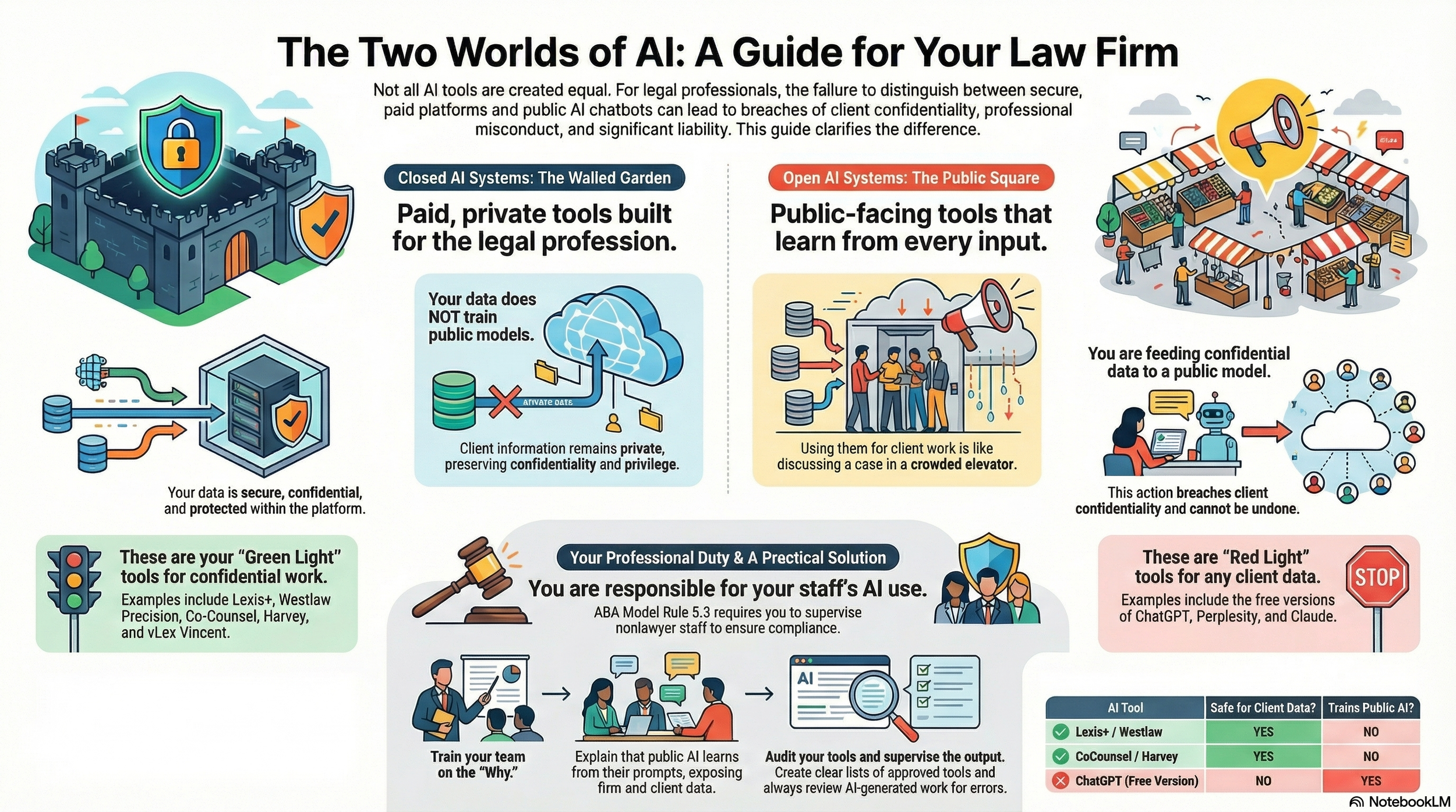

But there’s a second, more important dimension to tokens: ethics and professional responsibility. The ABA Model Rules of Professional Conduct—particularly Rules 1.1 (Competence), 1.6 (Confidentiality of Information), and 5.3 (Responsibilities Regarding Nonlawyer Assistance)—apply directly when lawyers use AI tools that process client data.

Rule 1.1 requires competence, which Comment 8 to the rule extends to keeping abreast of the benefits and risks of relevant technology. Attorneys must understand how their chosen AI tools function, at least well enough to evaluate token-based costs, data use, and limitations.

Rule 1.6 restricts how client confidential information may be shared or stored. Submitting text to an AI system means tokens representing that text may travel through third-party servers or APIs. Lawyers must confirm the AI tool’s data handling complies with client confidentiality obligations.

Rule 5.3 extends similar oversight duties when relying on vendors that provide AI-based services. Understanding what happens to client data at the token level helps attorneys fulfill those responsibilities.

A “token” is a small segment of text.

In short, tokens are not just technical units. They represent the very language of client matters, billing data, and confidential work. Understanding tokens helps lawyers ensure efficient billing, maintain confidentiality, and stay compliant with professional ethics rules while embracing modern legal technology.

Tokens may be tiny units of text—but for lawyers, they’re big steps toward ethical, informed, and confident use of AI in practice. ⚖️💡