MTC: PornHub Breach: Cybersecurity Wake-Up Call for Lawyers

Lawyers are the first-line defenders of their clients' PII.

It's the start of the new year, and as good a time as any to remind the legal profession of its cybersecurity obligations! The recent PornHub data exposure reveals critical vulnerabilities every lawyer must address under the ABA Model Rules. Third-party analytics provider Mixpanel suffered a breach compromising user email addresses, triggering targeted sextortion campaigns. This incident illuminates three core security domains for legal professionals while highlighting specific duties under ABA Model Rules 1.1, 1.6, 5.1, 5.3, and Formal Opinion 483.

Understanding the Breach and Its Legal Implications

The PornHub incident demonstrates how failures by third-party vendors can lead to cascading security consequences. When Mixpanel's systems were compromised, attackers gained access to email addresses that now fuel sextortion schemes. Criminals threaten to expose purported adult site usage unless victims pay cryptocurrency ransoms. For law firms, this scenario is not hypothetical—your practice management software, cloud storage providers, and analytics tools present identical vulnerabilities. Each third-party vendor represents a potential entry point for attackers targeting your client data.

ABA Model Rule 1.1: The Foundation of Technology Competence

ABA Model Rule 1.1 requires lawyers to provide competent representation, and Comment 8 explicitly extends this duty to technology: "To maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology". This is not a suggestion; it is an ethical mandate. About 40 states have adopted this technology competence requirement into their professional conduct rules.

What does this mean practically? You must understand the security implications of every technology tool your firm uses. Before onboarding any platform, conduct due diligence on the vendor's security practices. Require SOC 2 compliance, cyber insurance verification, and detailed security questionnaires. The "reasonable efforts" standard does not demand perfection, but it does require informed decision-making. You cannot delegate technology competence entirely to IT consultants. You must understand enough to ask the right questions and evaluate the answers meaningfully.

ABA Model Rule 1.6: Safeguarding Client Information in Digital Systems

Rule 1.6 establishes your duty of confidentiality, and Rule 1.6(c), as explained in Comment 18, requires "reasonable efforts to prevent [the inadvertent or unauthorized] access or disclosure" of information relating to the representation of a client. This duty extends beyond privileged communications to all client-related information stored digitally.

The PornHub breach illustrates why this matters. Your firm's email system, document management platform, and client portals contain information criminals actively target. The "reasonable efforts" analysis considers the sensitivity of information, likelihood of disclosure without additional safeguards, cost of safeguards, and difficulty of implementation. For most firms, this means mandatory multi-factor authentication (MFA) on all systems, encryption for data at rest and in transit, and secure file-sharing platforms instead of email attachments.

You must also address third-party vendor access under Rule 1.6. When you grant a case management platform access to client data, you remain ethically responsible for protecting that information. Your engagement letters should specify security expectations, and vendor contracts must include confidentiality obligations and breach notification requirements.

ABA Model Rules 5.1 and 5.3: Supervisory Responsibilities Extend to Technology

Lawyers need to stay up to date on the security protocols for their firm's software!

Rule 5.1 imposes duties on partners and supervisory lawyers to ensure the firm has measures giving "reasonable assurance that all lawyers in the firm conform to the Rules of Professional Conduct". Rule 5.3 extends this duty to nonlawyer assistants, which courts and ethics opinions have interpreted to include technology vendors and cloud service providers.

If you manage a firm or supervise other lawyers, you must implement technology policies and training programs. This includes security awareness training, password management requirements, and incident reporting procedures. You cannot assume your younger associates understand cybersecurity best practices—they need explicit training and clear policies.

For nonlawyer assistance, you must "make reasonable efforts to ensure that the person's conduct is compatible with the professional obligations of the lawyer". This means vetting your IT providers, requiring them to maintain appropriate security certifications, and ensuring they understand their confidentiality obligations. Your vendor management program is an ethical requirement, not just a business best practice.

ABA Formal Opinion 483: Data Breach Response Requirements

ABA Formal Opinion 483 establishes clear obligations when a data breach occurs. Lawyers have a duty to monitor for breaches, stop and mitigate damage promptly, investigate what occurred, and notify affected clients. This duty arises from Rules 1.1 (competence), 1.6 (confidentiality), and 1.4 (communication).

The Opinion advises, as a matter of preparation and best practice, that you develop a written incident response plan before a breach occurs. Your plan should identify who will coordinate the response, how you will communicate with affected clients (including backup communication methods if email is compromised), and what steps you will take to assess and remediate the breach. When a breach does occur, you must document what data was accessed, whether malware was used, and whether client information was taken, altered, or destroyed.

Notification to clients is mandatory when a breach involves material client confidential information. The notification must be prompt and include what happened, what information was involved, what you are doing in response, and what clients should do to protect themselves. This duty extends to former clients in many circumstances, as their files may still contain sensitive information subject to state data breach laws.

Three Security Domains: Personal, Practice, and Client Protection

Your Law Practice's Security

Under Rules 5.1 and 5.3, you must implement reasonable security measures throughout your firm. Conduct annual cybersecurity risk assessments. Require MFA on all systems. Implement data minimization principles: share only what vendors absolutely need. Establish incident response protocols before breaches occur. Your supervisory duties require you to ensure that all firm personnel, including nonlawyer staff, understand and follow the firm's security policies.

Client Security Obligations

Rule 1.4 requires you to keep clients reasonably informed, which includes advising them on security matters relevant to their representation. Clients experiencing sextortion need immediate, informed guidance. Preserve all threatening emails with headers intact. Document timestamps and demands. Advise clients never to pay or respond: a payment or reply confirms that the address is actively monitored and often leads to additional demands. Report incidents to the FBI's Internet Crime Complaint Center (IC3) and local cybercrime divisions. For family law practitioners, understand that sextortion often targets vulnerable individuals during contentious proceedings. Criminal defense attorneys must recognize these threats as extortion, not embarrassment issues. Your competence under Rule 1.1 requires you to understand these threats well enough to provide effective guidance.
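
For readers who want to see what "headers intact" can look like in practice, here is a minimal Python sketch. It assumes the threatening message has been exported from the mail client as a raw .eml file; the function name `evidence_record` and the sample message are hypothetical and purely illustrative. The sketch records a SHA-256 fingerprint of the untouched file alongside the key headers, so anyone can later check that the preserved copy has not changed. It is one possible approach, not a forensic standard.

```python
import hashlib
from email import message_from_bytes
from email.policy import default


def evidence_record(raw_message: bytes) -> dict:
    """Summarize a raw exported email without modifying it (illustrative helper)."""
    msg = message_from_bytes(raw_message, policy=default)
    return {
        # Fingerprint of the exact bytes that were exported.
        "sha256": hashlib.sha256(raw_message).hexdigest(),
        "from": str(msg.get("From", "")),
        "date": str(msg.get("Date", "")),
        "subject": str(msg.get("Subject", "")),
        "message_id": str(msg.get("Message-ID", "")),
        # Each "Received" header shows one hop the message took between servers.
        "received": [str(h) for h in msg.get_all("Received", [])],
    }


# Hypothetical sample message, made up for this illustration.
sample = (
    b"Received: from mail.example.net by mx.example.org; "
    b"Mon, 05 Jan 2026 09:15:00 +0000\r\n"
    b"From: sender@example.net\r\n"
    b"To: client@example.org\r\n"
    b"Date: Mon, 05 Jan 2026 09:14:58 +0000\r\n"
    b"Subject: Pay now\r\n"
    b"Message-ID: <abc123@example.net>\r\n"
    b"\r\n"
    b"Threatening text here.\r\n"
)

record = evidence_record(sample)
for key, value in record.items():
    print(f"{key}: {value}")
```

Keep the original exported file alongside any summary like this. The summary is a convenience for your notes, not a substitute for the message itself.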

Personal Digital Hygiene

Your personal email account is your digital identity's master key. Enable MFA on all professional and personal accounts. Use unique, complex passwords managed through a password manager. Consider pseudonymous email addresses for sensitive subscriptions. Separate your litigation communications from personal browsing activities. The STOP framework applies: Slow down, Test suspicious contacts, Opt out of high-pressure conversations, and Prove identities through independent channels. Your personal security failures can compromise your professional obligations under Rule 1.6.

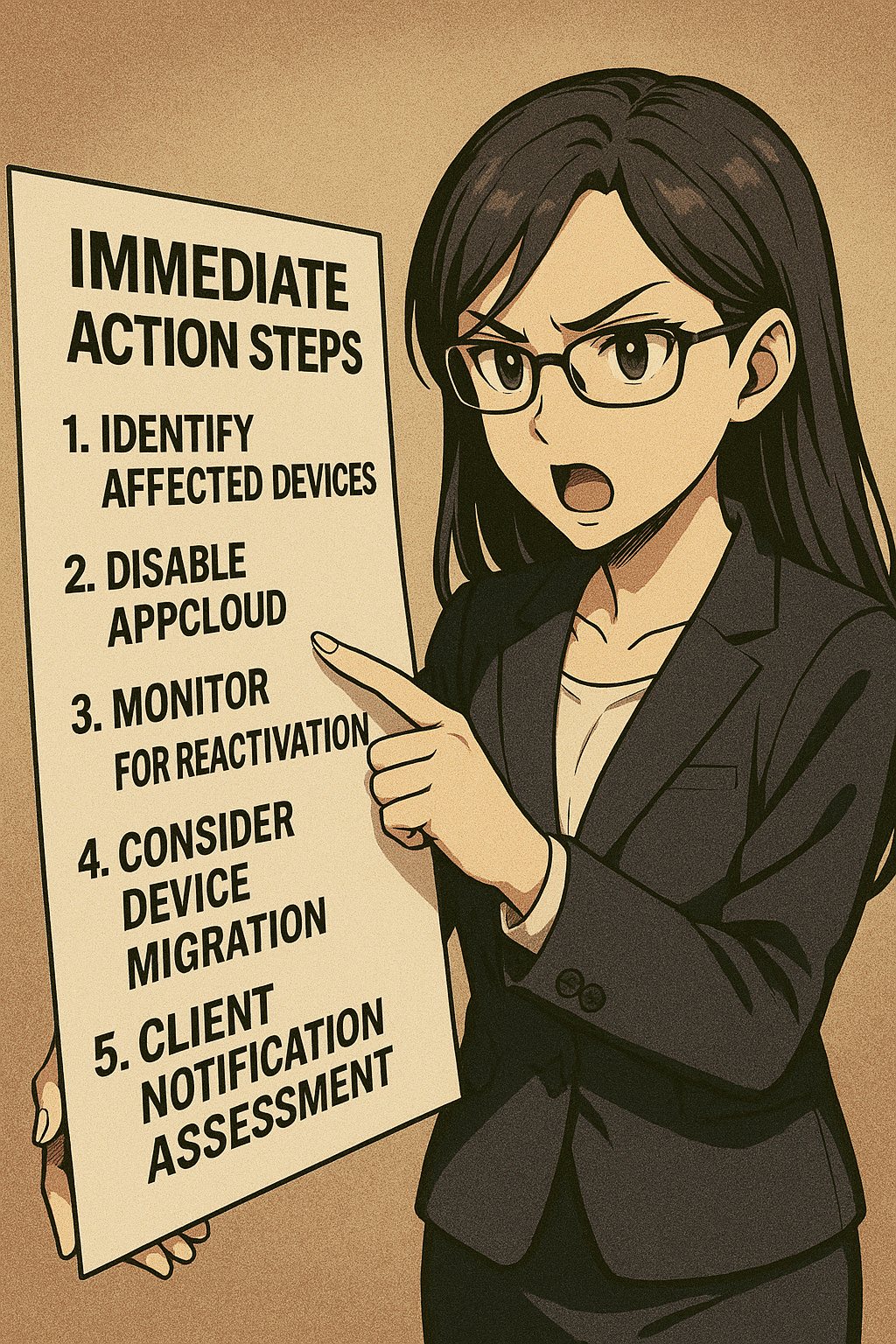

Practical Implementation Steps

There are five practical implementation steps lawyers can take today to make their practice cyber compliant!

First, conduct a technology audit to map every system that stores or accesses client information. Identify all third-party vendors and assess their security practices against industry standards.

Second, implement MFA across all systems immediately—this is one of the most effective and cost-efficient security controls available.

Third, develop written security policies covering password management, device encryption, remote work procedures, and incident response.

Fourth, train all firm personnel on these policies and conduct simulated phishing exercises to test awareness.

Fifth, review and update your engagement letters to include technology provisions and breach notification procedures.
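
The technology audit in the first step can start as a simple inventory. Below is a minimal Python sketch of one, offered as an illustration rather than a compliance tool. The record fields, the vendor names, and the three checks (MFA, a SOC 2 report on file, a breach notification clause) are assumptions drawn from the safeguards discussed in this article; adapt them to your own firm.

```python
from dataclasses import dataclass


@dataclass
class SystemRecord:
    """One system or vendor in the firm's inventory (hypothetical fields)."""
    name: str
    vendor: str
    holds_client_data: bool
    mfa_enabled: bool
    soc2_report_on_file: bool
    breach_notice_clause: bool


def audit_gaps(record: SystemRecord) -> list:
    """Return the gaps found for one system that touches client data."""
    if not record.holds_client_data:
        return []
    gaps = []
    if not record.mfa_enabled:
        gaps.append("MFA not enabled")
    if not record.soc2_report_on_file:
        gaps.append("no SOC 2 report on file")
    if not record.breach_notice_clause:
        gaps.append("contract lacks breach notification clause")
    return gaps


def audit_inventory(records: list) -> dict:
    """Map each system name to its gaps, skipping systems with none."""
    results = {}
    for record in records:
        gaps = audit_gaps(record)
        if gaps:
            results[record.name] = gaps
    return results


# Made-up inventory for illustration only.
inventory = [
    SystemRecord("Practice management", "ExampleVendor A", True, True, True, False),
    SystemRecord("Cloud storage", "ExampleVendor B", True, False, False, True),
    SystemRecord("Website analytics", "ExampleVendor C", False, False, False, False),
]

for system, gaps in audit_inventory(inventory).items():
    print(f"{system}: {', '.join(gaps)}")
```

A spreadsheet works just as well. What matters is that every system touching client information appears in the list and is reviewed on a schedule.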

Conclusion

The PornHub breach is not an isolated incident—it is a template for how modern attacks occur through third-party vendors. Your ethical duties under ABA Model Rules require proactive cybersecurity measures, not reactive responses after a breach. Technology competence under Rule 1.1, confidentiality protection under Rule 1.6, supervisory responsibilities under Rules 5.1 and 5.3, and breach response obligations under Formal Opinion 483 together create a comprehensive framework for protecting your practice and your clients. Cybersecurity is no longer an IT issue delegated to consultants; it is a core professional competency that affects your license to practice law. The time to act is before your firm appears in a breach notification headline.