When the companies behind your CRM and AI research tools start suing each other, the dispute is not just “tech industry drama.” It can reshape the practical and ethical foundations of your practice. Put simply, the Clio–Alexi fight is about who controls valuable legal data, how that data can be used to power AI tools, and whether one side is using its market position unfairly.

Clio, a major practice‑management and CRM platform, is tied to legal research tools and large legal databases. Alexi is a newer AI‑driven research company that depends on access to caselaw and related materials to train and deliver its products. In broad strokes, one side claims the other misused or improperly accessed data and technology. The other responds that the litigation is a “sham” and anticompetitive, designed to limit a smaller rival and protect a dominant ecosystem. The allegations involve trade secrets, data licensing, and anticompetitive conduct. None of that may sound like your problem until you remember that your client data, workflows, and deadlines live inside tools these companies own, operate, or integrate with.

If your technology skills are limited or moderate, you do not need to decode every technical claim in the complaints and counterclaims. You do, however, need to recognize that vendor instability, lawsuits, and potential regulatory scrutiny can directly affect three things: your access to client files and calendars, the confidentiality of matter information stored in the cloud, and the long‑term reliability of the systems you use to serve clients and get paid. Seen in those terms, the dispute becomes squarely an ethics, risk‑management, and governance issue, not just an “IT” problem.

ABA Model Rule 1.1: Competence Now Includes Tech and Vendor Risk

Model Rule 1.1 requires “competent representation,” which includes the legal knowledge, skill, thoroughness, and preparation reasonably necessary for the representation. Comment 8 to the Rule, as amended in 2012, makes technology part of that duty: lawyers should keep abreast of changes in the law and its practice, “including the benefits and risks associated with relevant technology.” That does not mean you must be a programmer. It does mean you must understand, in practical terms, the tools on which your work depends and the risks they bring.

If your primary CRM, practice‑management system, or AI research tool is operated by a company in serious litigation about data, licensing, or competition, that is a material fact about your environment. Competence today includes: knowing which mission‑critical workflows rely on that vendor (intake, docketing, conflicts, billing, research, etc.); having at least a baseline sense of how vendor instability could disrupt those workflows; and building and documenting a plan for continuity — how you would move or access data if the worst‑case scenario occurred (for example, a sudden outage, injunction, or acquisition). Failing to consider these issues can undercut the “thoroughness and preparation” the Rule expects. Even if your firm is small or mid‑sized, and even if you feel “non‑technical,” you are still expected to think through these risks at a reasonable level.

ABA Model Rule 1.6: Confidentiality in a Litigation Spotlight

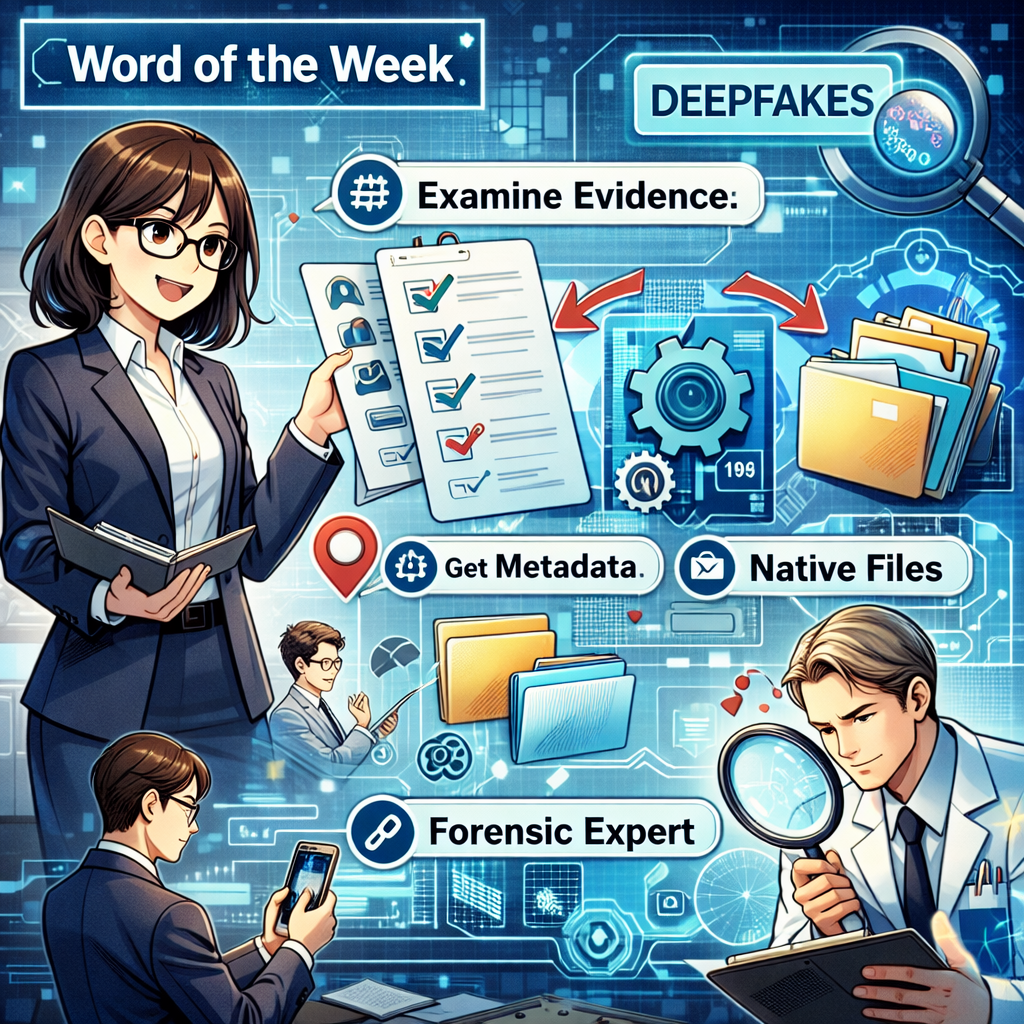

Model Rule 1.6 is often front of mind when lawyers think about cloud tools, and the Clio–Alexi dispute reinforces why. When a technology company is sued, its systems may become part of discovery. That raises questions like: what types of client‑related information (names, contact details, matter descriptions, notes, uploaded files) reside on those systems; under what circumstances that information could be accessed, even in redacted or aggregate form, by litigants, experts, or regulators; and how quickly and completely you can remove or export client data if a risk materializes.

You remain the steward of client confidentiality even when data is stored with a third‑party provider. Rule 1.6(c) requires you to make reasonable efforts to prevent the inadvertent or unauthorized disclosure of, or unauthorized access to, information relating to the representation. A diligent approach that does not demand technical expertise includes three steps. First, understand where your data is hosted, including jurisdictions, major sub‑processors, and data‑center regions. Second, review your contracts or terms of service for clauses on data access, subpoenas, law‑enforcement or regulatory requests, and notice to you. Third, confirm that you have clearly defined data‑export rights. Those rights should apply not only if you leave voluntarily, but also if the vendor is sold, enjoined, or materially disrupted by litigation. You are not expected to eliminate all risk, but you are expected to show that you considered how vendor disputes intersect with your duty to protect confidential information.

ABA Model Rule 5.3: Treat Vendors as Supervised Non‑Lawyer Assistants