📖 WORD OF THE WEEK (WoW): Zero Trust Architecture ⚖️🔐

Zero Trust Architecture and ABA Model Rules Compliance 🛡️

Lawyers need to "never trust, always verify" their network activity!

Zero Trust Architecture represents a fundamental shift in how law firms approach cybersecurity and fulfill ethical obligations. Rather than assuming that users and devices within a firm's network are trustworthy by default, this security model operates on the principle of "never trust, always verify." For legal professionals managing sensitive client information, implementing this framework has become essential to protecting confidentiality while maintaining compliance with ABA Model Rules.

The traditional security approach created a protective perimeter around a firm's network, trusting anyone inside that boundary. This model no longer reflects modern legal practice. Remote work, cloud-based case management systems, and mobile device usage mean that your firm's data exists across multiple locations and devices. Zero Trust abandons the perimeter-based approach entirely.

ABA Model Rule 1.6(c) requires lawyers to "make reasonable efforts to prevent the inadvertent or unauthorized disclosure of, or unauthorized access to, information relating to the representation of a client." Zero Trust Architecture directly supports this mandate by requiring continuous verification of every user and device accessing firm resources, regardless of location. The rule's standard is reasonable efforts, not perfect security, so adopting this approach helps a firm show that it is honoring the confidentiality duty that forms the foundation of legal practice.

Core Components Supporting Your Ethical Obligations

Zero Trust Architecture operates through three interconnected principles aligned with ABA requirements.

Legal professionals, do you know the core components of modern cybersecurity?

Continuous verification means that authentication does not happen once at login. Instead, systems continuously validate user identity, device health, and access context in real time.
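As a rough illustration of continuous verification, the sketch below re-checks identity freshness, device health, and access context on every request instead of once at login. The `AccessRequest` fields, the 15-minute window, and the trusted-country list are hypothetical values chosen for the example, not settings from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Tuple

# Hypothetical policy values; a real firm would set these with its IT provider.
MAX_VERIFICATION_AGE = timedelta(minutes=15)
TRUSTED_COUNTRIES = {"US"}


@dataclass
class AccessRequest:
    user_id: str
    last_verified_at: datetime  # when identity was last confirmed
    device_compliant: bool      # e.g. disk encrypted, operating system patched
    country: str                # coarse access context


def verify_request(req: AccessRequest, now: datetime) -> Tuple[bool, str]:
    """Re-evaluate trust on every request, not just at login."""
    if now - req.last_verified_at > MAX_VERIFICATION_AGE:
        return False, "re-authentication required"
    if not req.device_compliant:
        return False, "device failed health check"
    if req.country not in TRUSTED_COUNTRIES:
        return False, "unusual location"
    return True, "allowed"
```

In this sketch a user who logged in two hours ago is asked to re-authenticate even though the original login succeeded, which is the practical difference from a perimeter model.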

Least privilege access restricts each user to only the data and systems necessary for their specific role. An associate working on discovery does not need access to billing systems, and a paralegal in real estate does not need access to litigation files.
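A minimal sketch of least privilege, assuming a simple role-to-resource table: anything not explicitly granted is denied. The role and resource names are hypothetical and only mirror the examples in the paragraph above.

```python
# Hypothetical roles and resources, mirroring the examples in the text.
ROLE_PERMISSIONS = {
    "discovery_associate": frozenset({"discovery_documents"}),
    "real_estate_paralegal": frozenset({"real_estate_files"}),
    "billing_clerk": frozenset({"billing_system"}),
}


def can_access(role: str, resource: str) -> bool:
    """Deny by default: allow only what the role explicitly lists."""
    return resource in ROLE_PERMISSIONS.get(role, frozenset())
```

Here `can_access("discovery_associate", "billing_system")` returns `False`, and so does any lookup for a role that is missing from the table.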

Micro-segmentation divides your network into smaller, secure zones. This prevents lateral movement, which means that if a bad actor compromises one device or user account, they cannot automatically access all firm systems.
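The effect of micro-segmentation on lateral movement can be sketched as an explicit allow-list of traffic between zones. The device names, zone names, and the single allowed flow are all hypothetical; real segmentation is enforced by network equipment or software, not application code like this.

```python
# Hypothetical devices and the zone each one belongs to.
ZONE_OF = {
    "reception-pc": "front_office",
    "paralegal-laptop": "staff_workstations",
    "dms-server": "document_management",
    "billing-db": "billing",
}

# Only flows listed here are permitted; everything else is blocked.
ALLOWED_FLOWS = {("staff_workstations", "document_management")}


def connection_allowed(src: str, dst: str) -> bool:
    """Allow a connection only if both devices are known and the flow is listed."""
    src_zone, dst_zone = ZONE_OF.get(src), ZONE_OF.get(dst)
    if src_zone is None or dst_zone is None:
        return False
    return (src_zone, dst_zone) in ALLOWED_FLOWS


def reachable_hosts(compromised: str) -> list:
    """List what an attacker could reach from one compromised device."""
    return sorted(
        host for host in ZONE_OF
        if host != compromised and connection_allowed(compromised, host)
    )
```

Under these assumptions a compromised reception computer reaches nothing else, and a compromised paralegal laptop reaches the document management server but not the billing database.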

ABA Model Rule 1.1, Comment 8 provides that, to maintain competence, a lawyer should keep abreast of "the benefits and risks associated with relevant technology." Understanding Zero Trust Architecture helps demonstrate that your firm maintains technological competence in cybersecurity matters.

Additional critical components include multi-factor authentication, which requires users to verify their identity through multiple methods before accessing systems. Device authentication ensures that only approved and properly configured devices can connect to firm resources. Encryption protects data both at rest and in transit.
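To make the multi-factor authentication step concrete, here is a small sketch of the time-based one-time codes that authenticator apps commonly generate (the TOTP scheme from RFC 6238, built on RFC 4226). It uses only the Python standard library. The function names are illustrative, and a firm would normally rely on its identity provider instead of writing this itself.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time code (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based code: the counter is the number of elapsed 30-second steps."""
    return hotp(secret, unix_time // step, digits)


def second_factor_ok(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare in constant time so timing does not leak the expected code."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)
```

The code changes every 30 seconds, so a stolen password alone is not enough to pass the second check.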

ABA Model Rule 1.4 requires lawyers to keep clients "reasonably informed about the status of the matter," which includes significant developments affecting the representation. Zero Trust Architecture supports this duty by protecting client information and enabling prompt client notification if security incidents occur.

ABA Model Rules 5.1 and 5.3 require supervisory lawyers and managers to make reasonable efforts to ensure that subordinate lawyers and non-lawyer staff comply with professional obligations. Implementing Zero Trust creates the framework for effective supervision of cybersecurity practices across your entire firm.

Addressing Safekeeping Obligations

ABA Model Rule 1.15 requires that client property in a lawyer's possession be "appropriately safeguarded," and for most firms that property now includes electronic information. Zero Trust Architecture helps provide the security infrastructure needed to meet this safekeeping obligation. The rule also requires lawyers to keep complete records of client funds and other property and to preserve those records. Zero Trust's encryption and access controls help keep stored records protected from unauthorized access.

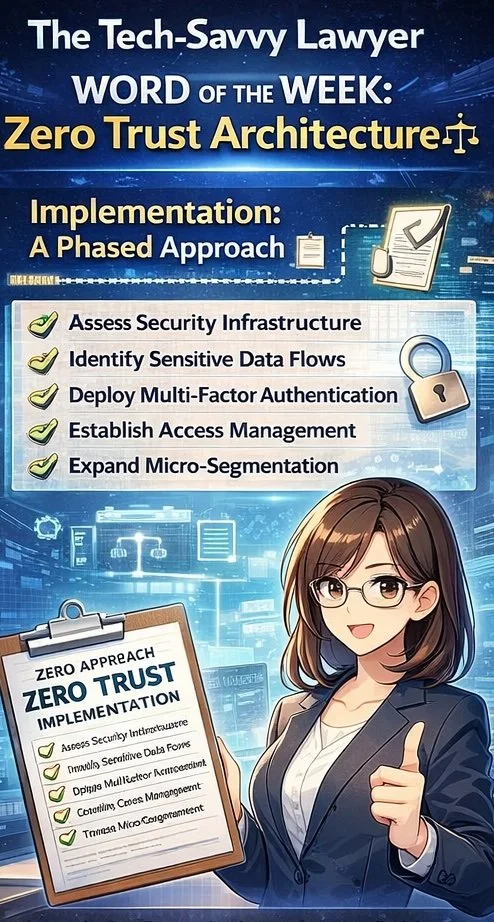

Implementation: A Phased Approach 📋

Implementing Zero Trust need not happen all at once. Begin by assessing your current security infrastructure and identifying sensitive data flows. Establish identity and access management systems to control who accesses what. Deploy multi-factor authentication across all applications. Then gradually expand micro-segmentation and monitoring capabilities as your systems mature. Document your efforts to demonstrate compliance with ABA Model Rule 1.6(c)'s requirement for "reasonable efforts."
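One hedged way to document those efforts is to record every access decision as a structured log entry that can later be produced as evidence. The field names below are hypothetical, and the sketch assumes the timestamp passed in is timezone-aware.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id: str, resource: str, allowed: bool,
                 reason: str, when: datetime) -> str:
    """Build one JSON log line describing an access decision.

    Assumes `when` is timezone-aware; it is normalized to UTC.
    """
    return json.dumps(
        {
            "timestamp": when.astimezone(timezone.utc).isoformat(),
            "user_id": user_id,
            "resource": resource,
            "decision": "allow" if allowed else "deny",
            "reason": reason,
        },
        sort_keys=True,
    )
```

Appending one such line per decision to a write-protected log gives the firm a dated record of who was allowed or denied access and why.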

Final Thoughts

Zero Trust Architecture transforms your firm's security posture from reactive protection to proactive verification while supporting compliance with essential ABA Model Rules. For legal practices handling confidential client information, this security framework is not optional. It protects your clients, your firm's reputation, and your ability to practice law with integrity.