🎙️ Ep. 117: Legal Tech Revolution, How Dorna Moini Built Gavel.ai to Transform the Practice of Law with AI and Automation.

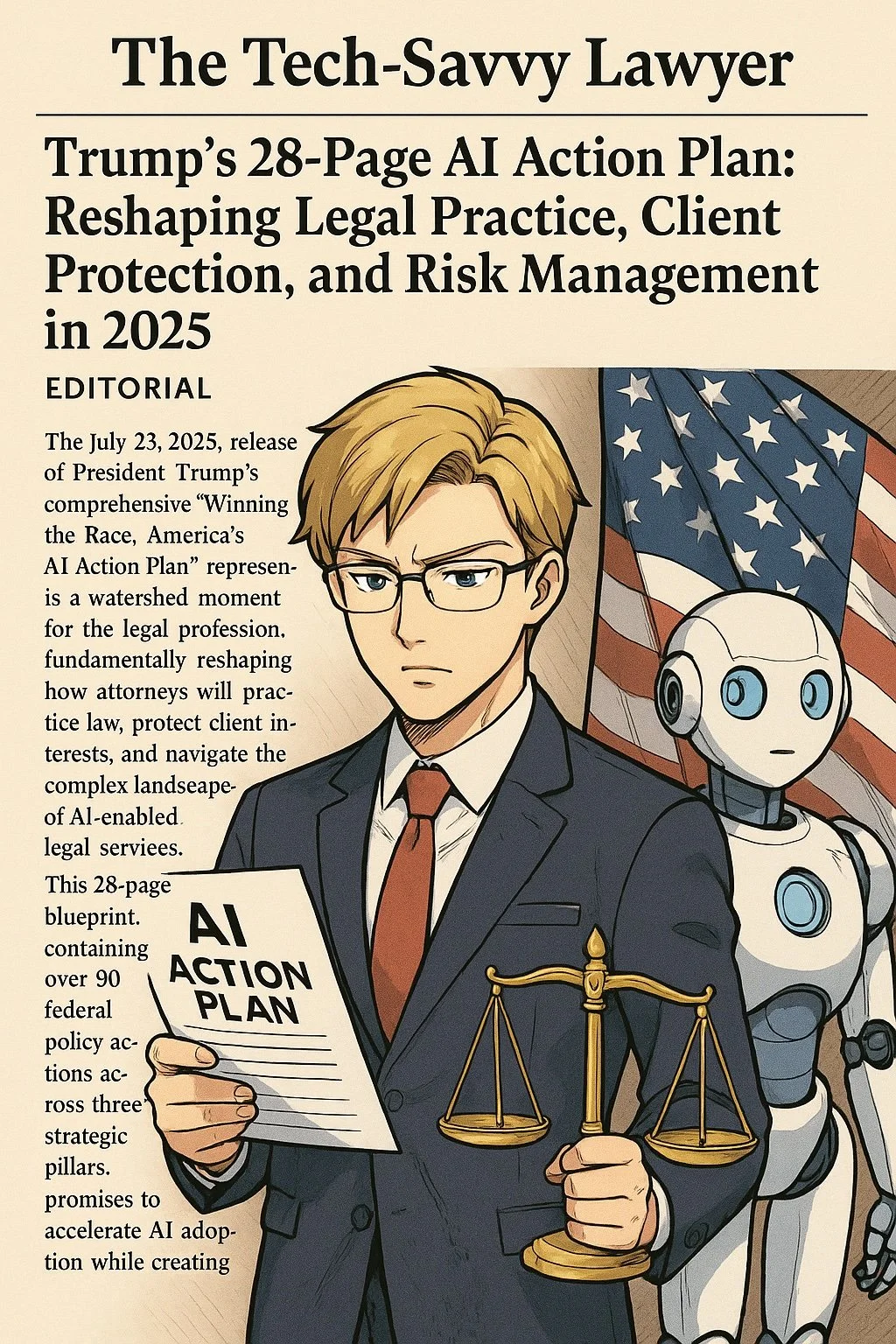

Dorna Moini, CEO and Founder of Gavel, discusses how generative AI is transforming the way legal professionals work. She explains how Gavel helps lawyers automate their work, save time, and reach more clients without needing to know how to code. In the conversation, she shares the top three ways AI has improved Gavel's tools and operations. She also highlights the most significant security risks that lawyers should be aware of when using AI tools. Lastly, she provides simple tips to ensure AI-generated results are accurate and reliable, as well as how to avoid false or misleading information.

Join Dorna and me as we discuss the following three questions and more!

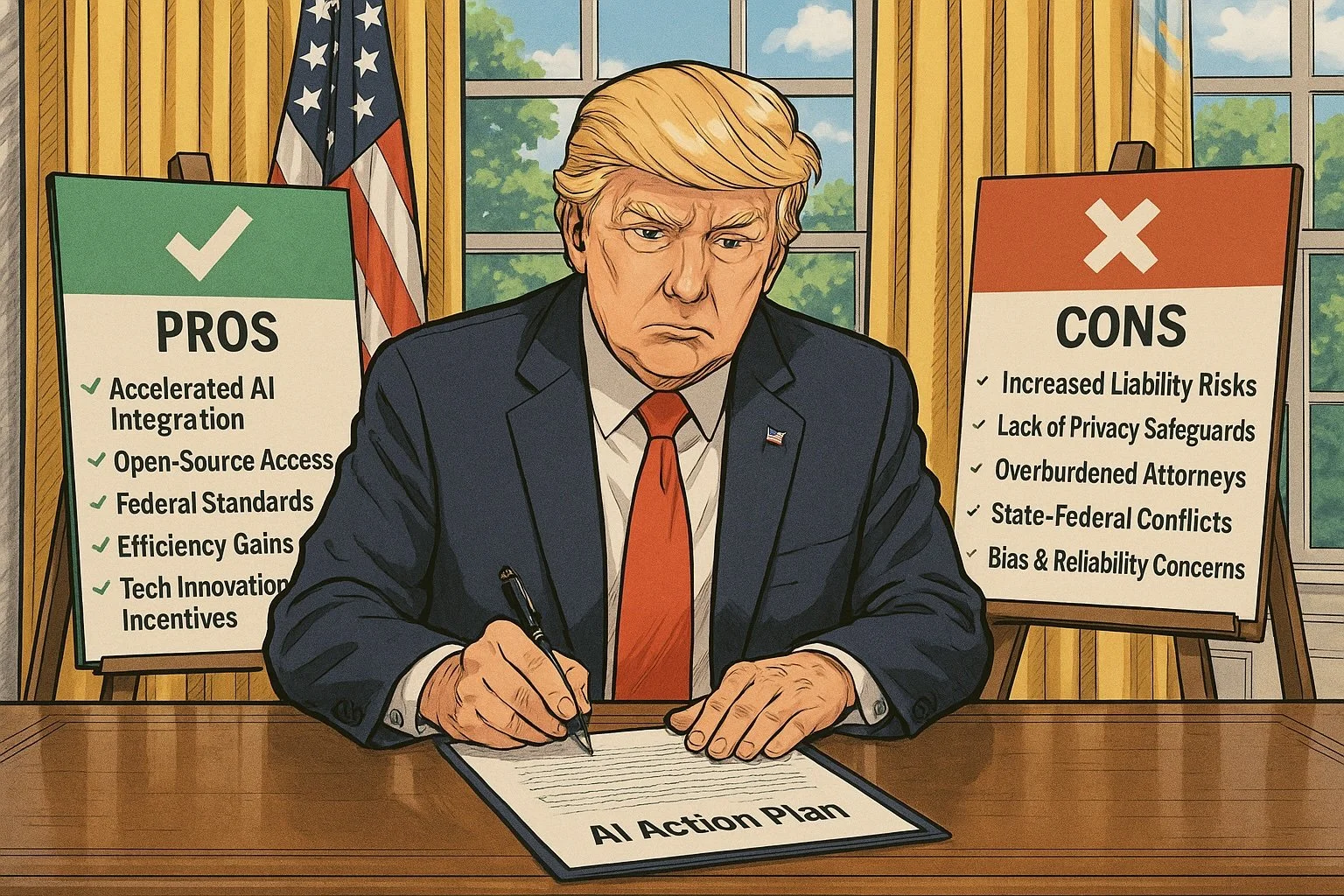

What are the top three ways generative AI has transformed Gavel's offerings and operations?

What are the top three most critical security concerns legal professionals should be aware of when using AI-integrated products like Gavel?

What are the top three ways to ensure the accuracy and reliability of AI-generated results, including measures to prevent false or misleading information or hallucinations?

In our conversation, we cover the following:

[01:16] Dorna's Tech Setup and Upgrades

[03:56] Discussion on Computer and Smartphone Upgrades

[08:31] Exploring Additional Tech and Sleeping Technology

[09:32] Generative AI's Impact on Gavel's Operations

[13:13] Critical Security Concerns in AI-Integrated Products

[16:44] Playbooks and Redline Capabilities in Gavel Exec

[20:45] Contact Information

Resources:

Connect with Dorna:

Instagram: instagram.com/dorna_at_gavel

LinkedIn: linkedin.com/in/documentautomation/

Website: gavel.io/

Websites & SaaS Products:

Apple Podcasts — Podcast platform (for reviews)

ChatGPT — AI conversational assistant by OpenAI

Gavel — AI-powered legal automation platform (formerly Documate)

Gavel Exec — AI assistant for legal document review and redlining (part of Gavel)

MacRumors — Apple news and product cycle information

Notion — Workspace for notes, databases, and project management

Slack — Team communication and collaboration platform

Hardware:

Apple iPhone 15 — Smartphone

Apple Mac Mini — Compact desktop computer

Apple Mac Studio — Desktop computer

Apple MacBook Pro — Laptop computer

Eight Sleep — Smart mattress and sleep technology

Other:

Apple Business Account — For business purchases and trade-ins