MTC: AI Governance Crisis - What Every Law Firm Must Learn from 1Password's Eye-Opening Security Research

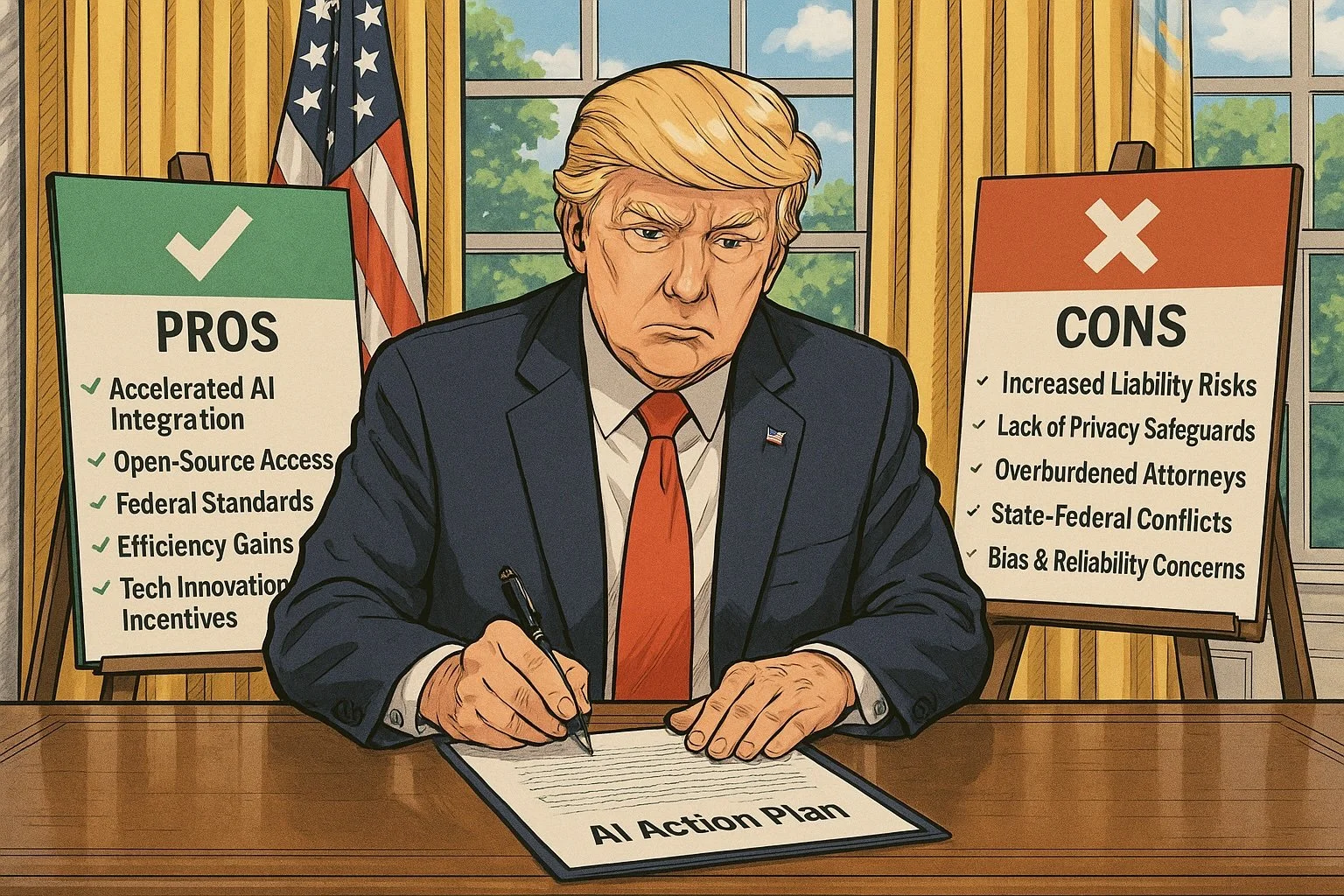

The legal profession stands at a crossroads. Recent research commissioned by 1Password reveals four critical security challenges that should serve as a wake-up call for every law firm embracing artificial intelligence. With 79% of legal professionals now using AI tools in some capacity while only 10% of law firms have formal AI governance policies, the disconnect between adoption and oversight has created unprecedented vulnerabilities that could compromise client confidentiality and expose firms to professional liability.

The Invisible AI Problem in Law Firms

The 1Password study's most alarming finding mirrors what law firms are experiencing daily: only 21% of security leaders have full visibility into AI tools used in their organizations. This visibility gap is particularly dangerous for law firms, where attorneys and staff may be uploading sensitive client information to unauthorized AI platforms without proper oversight.

Dave Lewis, Global Advisory CISO at 1Password, captured the essence of this challenge perfectly: "We have closed the door to AI tools and projects, but they keep coming through the window!" This sentiment resonates strongly with legal technology experts who observe attorneys gravitating toward consumer AI tools like ChatGPT for legal research and document drafting, often without understanding the data security implications.

The parallel to law firm experiences is striking. Recent Stanford HAI research revealed that even professional legal AI tools produce concerning hallucination rates: Westlaw AI-Assisted Research showed a 34% error rate, while Lexis+ AI exceeded 17%. (See my earlier editorial, "MTC/🚨BOLO🚨: Lexis+ AI™️ Falls Short for Legal Research!") These aren't consumer chatbots but professional tools marketed to law firms as reliable research platforms.

Four Critical Lessons for Legal Professionals

First, establish comprehensive visibility protocols. The 1Password research shows that 54% of security leaders admit their AI governance enforcement is weak, with 32% believing up to half of employees continue using unauthorized AI applications. Law firms must implement SaaS governance tools to identify AI usage across their organization and document how employees are actually using AI in their workflows.
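
For firms with an IT team, the idea of identifying AI usage can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular SaaS governance product works: the domain names, the approved list, and the `(user, domain)` record format are all hypothetical stand-ins for whatever a firm's web proxy or DNS logs actually provide.

```python
from collections import defaultdict

# Hypothetical watch list of AI tool domains (placeholders, not real services).
AI_DOMAINS = {"chat.ai-tool.example", "draft.ai-tool.example", "research.ai-tool.example"}

# Hypothetical list of tools the firm has reviewed and approved.
APPROVED_DOMAINS = {"research.ai-tool.example"}


def find_unapproved_ai_use(records):
    """Group users by AI domain for every watched domain that is not approved.

    `records` is an iterable of (user, domain) pairs, assumed to come from
    the firm's own proxy or DNS logs.
    """
    hits = defaultdict(set)
    for user, domain in records:
        normalized = domain.strip().lower()
        if normalized in AI_DOMAINS and normalized not in APPROVED_DOMAINS:
            hits[normalized].add(user)
    # Sort users so the report is stable from run to run.
    return {domain: sorted(users) for domain, users in hits.items()}


if __name__ == "__main__":
    sample = [
        ("asmith", "chat.ai-tool.example"),
        ("bjones", "research.ai-tool.example"),
        ("clee", "Chat.AI-Tool.example"),
        ("asmith", "intranet.firm.example"),
    ]
    for domain, users in find_unapproved_ai_use(sample).items():
        print(domain, users)
```

A report like this only shows who is reaching which tool. It does not show what was uploaded, so it complements rather than replaces documenting how staff actually use AI in their workflows.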

Second, recognize that good intentions create dangerous exposures. The study found that 63% of security leaders believe the biggest internal threat is employees unknowingly giving AI access to sensitive data. For law firms handling privileged attorney-client communications, this risk is considerably greater. Staff may innocently paste confidential case details into AI tools, potentially violating client confidentiality rules and creating malpractice liability.

Third, address the unmanaged AI crisis immediately. More than half of security leaders estimate that 26-50% of their AI tools and agents are unmanaged. In legal practice, this could mean AI agents are interacting with case management systems, client databases, or billing platforms without proper access controls or audit trails—a compliance nightmare waiting to happen.

Fourth, understand that traditional security models are inadequate. The research emphasizes that conventional identity and access management systems weren't designed for AI agents. Law firms must evolve their access governance strategies to include AI tools and create clear guidelines for how these systems should be provisioned, tracked, and audited.
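
To make "provisioned, tracked, and audited" concrete, here is a minimal Python sketch of a registry that treats each AI agent as its own identity with an explicit owner, an allow-list of systems, and an audit trail. It is an assumption-laden illustration rather than a description of 1Password's products or any real identity platform: `AgentRegistry`, the agent name, and the system names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentRegistry:
    """Hypothetical in-memory registry of AI agents and their permitted systems."""

    agents: dict = field(default_factory=dict)     # agent_id -> (owner, allowed systems)
    audit_log: list = field(default_factory=list)  # (timestamp, agent_id, action, target, allowed)

    def provision(self, agent_id, owner, allowed_systems):
        """Register an agent with a named human owner and an explicit allow-list."""
        self.agents[agent_id] = (owner, frozenset(allowed_systems))
        self._log(agent_id, "provision", ",".join(sorted(allowed_systems)), True)

    def authorize(self, agent_id, system):
        """Return True only if the agent is registered and the system is on its allow-list."""
        entry = self.agents.get(agent_id)
        allowed = entry is not None and system in entry[1]
        self._log(agent_id, "access", system, allowed)
        return allowed

    def _log(self, agent_id, action, target, allowed):
        # Every decision is recorded, including denials and unknown agents.
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((timestamp, agent_id, action, target, allowed))


if __name__ == "__main__":
    registry = AgentRegistry()
    registry.provision("intake-bot", owner="jdoe", allowed_systems=["case_management"])
    print(registry.authorize("intake-bot", "case_management"))
    print(registry.authorize("intake-bot", "billing"))
    print(registry.authorize("unregistered-bot", "client_database"))
```

The design choice worth noting is that unregistered agents are denied by default and the denial is still logged, which is what gives a firm something to review after the fact.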

Beyond Compliance: Strategic Imperatives

The American Bar Association's Formal Opinion 512 established clear ethical frameworks for AI use, but compliance requires more than policy documents. Law firms need proactive strategies that enable AI benefits while protecting client interests.

Effective AI governance starts with education. Most legal professionals aren't thinking about AI security risks in these terms. Firms should conduct workshops and tabletop exercises to walk through potential scenarios and develop incident response protocols before problems arise.

The path forward doesn't require abandoning AI innovation. Instead, it demands extending trust-based security frameworks to cover both human and machine identities. Law firms must implement guardrails that protect confidential information without slowing productivity—user-friendly systems that attorneys will actually follow.

Final Thoughts: The Competitive Advantage of Responsible AI Adoption

Firms that proactively address these challenges will gain significant competitive advantages. Clients increasingly expect their legal counsel to use technology responsibly while maintaining the highest security standards. Demonstrating comprehensive AI governance builds trust and differentiates firms in a crowded marketplace.

The research makes clear that security leaders are aware of AI risks but under-equipped to address them. For law firms, this gap between awareness and readiness represents both a challenge and an opportunity. Practices that invest in proper AI governance now will be positioned to leverage these powerful tools confidently while their competitors struggle with ad hoc approaches.

The legal profession's relationship with AI has fundamentally shifted from experimental adoption to enterprise-wide transformation. The 1Password research provides a roadmap for navigating this transition securely. Law firms that heed these lessons will thrive in the AI-augmented future of legal practice.

MTC